Quick Revision for Multimedia and Animation - Vikatu

Unit 1

Multimedia is a medium that allows information to be transferred easily from one place to another. It is the presentation of text, pictures, audio, and video with links and tools that allow the user to navigate, engage, create, and communicate using a computer. More broadly, multimedia refers to the computer-assisted integration of text, drawings, still and moving images (video), graphics, audio, animation, and any other media in which information can be expressed, stored, communicated, and processed digitally.

Multimedia is being employed in a variety of disciplines,

including education, training, and business.

Applications of Multimedia

The term multimedia indicates that computer information can be represented using audio, video, and animation in addition to text, graphics, and photographs. Multimedia is used in:

1. Education

Multimedia is becoming very popular in education. It is often used to produce study materials for pupils and to help them gain a thorough understanding of various subjects. Edutainment, which combines education and entertainment, has become highly popular in recent years. It presents learning to the user in an enjoyable form.

2. Entertainment

The use of multimedia in films creates a distinctive audio and visual impression. Multimedia has transformed the art of filmmaking around the world, and many difficult effects and action sequences are achieved with it.

The entertainment sector makes extensive use of multimedia. It’s particularly

useful for creating special effects in films and video games. The most visible

illustration of the emergence of multimedia in entertainment is music and video

apps. Interactive games become possible thanks to the use of multimedia in the

gaming business. Video games are more interesting because of the integrated

audio and visual effects.

3. Business

Marketing, advertising, product demos, presentations, training, and networked communication are multimedia applications that are helpful in many businesses. An audience can grasp an idea quickly when it is delivered as a multimedia presentation. Multimedia is a simple and effective way to attract visitors' attention and convey information about products. In marketing, it is also used to encourage clients to buy.

4. Technology & Science:

Multimedia has a wide range of applications in science and technology. It can communicate audio, film, and other multimedia documents in a variety of formats, and it makes live broadcasting from one location to another possible.

It is beneficial to surgeons because they can rehearse intricate procedures such as brain tumor removal and reconstructive surgery using images made from imaging scans of the human body. Plans can be produced more efficiently to cut expenses and problems.

5. Fine Arts:

Multimedia artists work in the fine arts, combining

approaches employing many media and incorporating viewer involvement in some

form. For example, a variety of digital mediums can be used to combine movies

and operas.

Digital artist is a new word for these types of artists. Digital painters make

digital paintings, matte paintings, and vector graphics of many varieties using

computer applications.

6. Engineering

Software engineers frequently use multimedia in computer simulations for military or industrial training. It is also used for software interfaces created by creative experts and software engineers in partnership. Such simulations depend on a computer to carry out the many detailed calculations involved.

Components of Multimedia

Multimedia consists of the following 5 components:

1. Text

Text is made up of characters that form words, phrases, and paragraphs. It appears in some form in almost every multimedia creation. Text can be set in a variety of fonts and sizes to suit the professional presentation of the multimedia software. In multimedia systems, text can communicate specific information or supplement the information provided by the other media.

2. Graphics

Graphics are digital representations of non-text information, such as a sketch, chart, or photograph. They add to the appeal of a multimedia application. Many people dislike reading large amounts of text on a computer, so pictures are often used instead of words to clarify concepts, offer background information, and so on. Graphics are at the heart of any multimedia presentation, and their use makes the concept more effective and easier to present. Programs such as Windows Picture and Fax Viewer and Internet Explorer are often used to view graphics. Adobe Photoshop is a popular graphics editing program that lets you change graphics and make them more effective and appealing.

3. Animations

Animation is the process of making still images appear to move: a sequence of still images is flipped through quickly, which gives the impression of movement. Animation can also make a presentation lighter and more appealing, and it is very popular in multimedia applications. Commonly used animation viewing programs include Fax Viewer and Internet Explorer.

4. Video

Video consists of photographic images that are played back at speeds of 15 to 30 frames per second and so appear to be in full motion. The term video refers to a moving image that is accompanied by sound, such as a television picture. Text can also be included in videos, either as captions for spoken words or as text embedded in an image, as in a slide presentation. Programs widely used to view videos include RealPlayer and Windows Media Player.

5. Audio

Audio is any sound, whether it is music, conversation, or something else. Sound is one of the most important aspects of multimedia, delivering the joy of music, special effects, and other forms of entertainment. The decibel is the unit of measurement for volume and sound pressure level. Audio files are used as part of the application content as well as to enhance interaction. When audio files appear within online applications and webpages, they must occasionally be delivered using plug-in media players. MP3, WMA, Wave, MIDI, and RealAudio are examples of audio formats. Programs widely used to play audio include RealPlayer and Windows Media Player.

Steps to making the perfect multimedia presentation

For

a static presentation, you would probably load up PowerPoint, Google Slides, or

Keynote and be ready to go. With media elements, however, you’ll have to think

outside the box.

If

you’re incorporating audio, video, animations, or anything else, you’ll

have to find it somewhere. If you want to make it yourself, you’ll need the

tools for it, and some design agencies are better for multimedia than others.

To help, here are a few award-winning presentation programs to consider:

PowerPoint/Google Slides/Keynote

Let’s start with the basics. Each of these classic

presentation tools is quite powerful. They can be used to put together

excellent multimedia presentations.

One of the classic rookie mistakes in presentations is carefully outlining your content but not paying attention to your imagery. Every detail, down to the shape of your lines, needs to look professional. Presentation design is a crucial step that shouldn’t be overlooked. If you happen to have a lot of design know-how, you can do this yourself. Otherwise, you’re left with three options:

- Use a free template

- Use a paid template

- Hire a professional (freelancer, agency, or design service)

Color schemes

Ensure

that you’re properly using color theory when designing your slides.

For a business presentation, use colors that are part of your brand identity or

featured in your logo. Tools like Colors can help you generate full

color schemes.

Visual themes

Consider

expressing the message of your presentation with visual themes and metaphors.

For instance, if your message is aspirational, you can use space or mountain

imagery to signify shooting for the stars. If your business is cutting-edge,

circuits and sci-fi imagery can help convey a sense of futurism.

Dynamic imagery:

Pair

different fonts and employ all different types of slides. Consistency is

key, but every slide should be distinctive in some way to keep your audience

invested.

4) Prepare your media

Since

you’ve planned ahead, you probably have a good idea for what media you want to

include in your presentation. Now that your slides are designed, it’s a good

idea to get your multimedia elements ready so you can easily drop them in

during the editing process.

Narration: Pick out your favorite recording

software or DAW (digital audio workstation) and hop to it! For a

professional presentation, you want to make sure your audio is fairly high

quality. Use a large closet or other audio-friendly space for recording if you

don’t have an audio setup.

Music: You can use any music you want for an internal

presentation, but for a public conference, you should definitely seek out

some royalty-free audio.

Video: Whether you’re using pre-recorded or live video,

you’ll want to make sure you have the right setup. As with narration, you’ll

want high-quality sound, along with a decent camera. For live video, try to use

an area with a strong internet connection to ensure you don’t suffer technical

difficulties.

Interactive

elements: Creating these can be part of the slide design

process (for instance, if you’re incorporating a game into your presentation).

Creating these from scratch requires a great deal of technical know-how, but

you can also find lots of pre-made templates out there.

GIFs

and animations: These are also included in many templates,

since they’re such a vital part of creating a dynamic multimedia presentation.

You may consider using animations for clever transitions, to spice

up infographics, or just to add color to your slides.

6) Add your multimedia elements

Once

you’ve got everything laid out, it’s time to add the fun stuff. Keynote,

PowerPoint, and Google Slides all have accessible tools for adding multimedia

elements, as do the other software examples listed above.

While

preparing your media is a challenge, you’ll also have to spend some time

figuring out the best way to integrate them. Technical difficulties can be a

death sentence for any presentation, so you’ll want to prepare in advance to

ensure everything goes smoothly.

Typically,

you’ll be able to control what settings cause the media to play. For instance,

Google Slides lets you set elements to play automatically, manually, or with a

click. Regardless, you should be sure to preview your slideshow and make sure

that everything looks right and plays on cue.

Plain text files are usually saved in the most basic text file format, which takes the ".txt" extension. These files are often created and edited in Notepad, the text editor included with Windows, or in another text editor. However, text files can be opened by virtually any document or text editor, including more powerful applications such as Notepad++, WordPad, Microsoft Office, or OpenOffice.

Another place where you can

find plain text is input forms in websites and apps. Many social media

websites, such as Twitter and Instagram, only let you post captions and tweets

as plain text, although there are some exceptions, such as hashtags and emoji.

Therefore, you cannot add any additional formatting to these elements. They are

automatically formatted according to the standards of the website or app. Older

email clients also often have plain text modes, allowing you to send messages

in plain text.

The Benefits of Using Plain Text Files

Many people opt to use plain

text rather than rich text for most of their editing. This practice is

especially common among programmers and developers, who code in languages

constructed with plain text and are used to that environment.

Plain text is simple, easy

to read, and can be read and sent to anyone. It also has none of the device or

software compatibility issues that come with varying fonts. Those are just some

of the reasons why many people use text files over more powerful applications

like Word. There is even a large group of people who use plain text for

all text editing, from creating grocery lists to typing out full-length novels.

Another important use of

plain text files is that they form most of the underlying infrastructure behind

files and web pages. For example, ".ini" files used to keep

configurations for Windows applications are often stored in a plain text format.

This allows you to edit your settings by simply opening them up in Notepad.
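
Because an ".ini" file is plain text, a program can read it just as easily as a person can in Notepad. The following is a minimal sketch using Python's standard `configparser` module. The section name `display` and the keys `font` and `font_size` are hypothetical, chosen only for illustration.

```python
import configparser

# Hypothetical contents of a plain text settings file such as "app.ini".
INI_TEXT = """
[display]
font = Consolas
font_size = 11
"""

def read_font_size(ini_text):
    """Parse INI-formatted plain text and return the font size as an integer."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    return parser.getint("display", "font_size")

print(read_font_size(INI_TEXT))
```

The same text could be changed by hand in any text editor and read back without any special tooling.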

Formatted text is text displayed in a special,

specified style. In computer applications, formatting data may be associated

with text data to create formatted text. How formatted text is created and

displayed is dependent on the operating system and application software used on

the computer.

Text formatting data may be *qualitative* (e.g., font family), or *quantitative* (e.g., font size, or color). It may also indicate a *style of emphasis* (e.g., boldface, or italics), or a *style of notation* (e.g., strikethrough, or superscript).

The purpose of formatted text is to enhance the

presentation of information. For example, in the previous paragraph, the

italicized words are each followed by examples. At a glance, the reader can

ascertain that there are four special words in the paragraph. The goal is to

help the reader to obtain, understand, and retain the information.

Unformatted text

Unformatted text is any text that is not associated with any formatting information. It is plain text, containing only printable characters, white space, and line breaks.

What is RTF?

RTF stands for Rich Text Format. It is a file format that allows you to save formatted documents in a platform-independent way, as a text file that is both human-readable and machine-readable. RTF files can be opened and edited by most word processors, such as Microsoft Word or OpenOffice Writer, and are often used for exchanging documents between different word-processing applications. An RTF file can also be opened in a plain text editor or a web browser, but then the formatting codes may be shown or the formatting may not be preserved.

One

downside of RTF files is that they don’t always transfer formatting correctly

when opened in different programs. For example, if you create an RTF file in

Microsoft Word and then open it in Google Docs, the formatting may not look

exactly the same.
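
To make the idea of a human-readable formatted file concrete, here is a rough sketch that builds a very small RTF document as a Python string. The helper name `make_rtf` is hypothetical, and the sketch uses only a few basic RTF control words (`\rtf1`, `\ansi`, `\b`, `\par`). It handles ASCII text only, and real word processors write far more header information.

```python
def make_rtf(plain, bold):
    """Build a tiny RTF document: plain text followed by a bold phrase."""
    def escape(text):
        # Backslashes and braces have special meaning in RTF, so escape them.
        return text.replace("\\", "\\\\").replace("{", "\\{").replace("}", "\\}")

    # The outer braces form the document group; {\b ...} limits bold to one phrase.
    return "{\\rtf1\\ansi " + escape(plain) + " {\\b " + escape(bold) + "}\\par}"

doc = make_rtf("Multimedia combines", "text, audio and video")
print(doc)
```

Saving the printed string in a file with the ".rtf" extension should let a word processor show the second phrase in bold, while a plain text editor shows the control words themselves.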

What is HTML?

HTML (HyperText Markup Language) is the standard markup language for building web pages and web applications. With HTML, you can create your own website, and you can make it interactive by using JavaScript.

Both RTF and HTML are text-based formats, but they serve different purposes. RTF describes the formatting of a word-processing document, while HTML describes the structure and semantics of text, such as headings, paragraphs, lists, links, and quotes. RTF, in contrast, supports only text formatting features.

One advantage of using HTML to format text is that it is an open standard supported by every web browser, although the exact rendering can still vary from one browser to another.

Differences Between RTF and HTML

The following table highlights the major differences between RTF and HTML:

| Characteristics | RTF | HTML |
|---|---|---|
| Founded | RTF is a document format that was developed by Microsoft in 1987. | HTML is the primary language of the World Wide Web and was developed in 1990 by Tim Berners-Lee. |
| Standard | It is defined by Microsoft's own RTF specification. | It was originally based on Standard Generalized Markup Language (SGML). |
| Extension | RTF files are saved with the ".rtf" extension. | HTML files are saved with the ".html" extension. |
| Usage | Used for storing documents | Used to share content |
| Designed | RTF is designed for creating formatted documents that will be read on a screen or printed out on paper. | HTML is designed specifically for displaying content on a web page. |
| Support | RTF supports fewer image types. | HTML supports more image types. |
| Specifications | RTF cannot embed audio and video. | HTML can embed audio and video. |

Common text preparation tools:

Word processing programs, such as MS Word and WordPerfect, are useful for creating text for titles that are text intensive. Once text has been created in a word processing program, it can easily be copied into a multimedia title.

If the title is not text intensive, it may be more efficient to use graphics programs such as CorelDraw or Photoshop to create stylish text. Both CorelDraw and Photoshop let you color the text, set the font, point size, and type style, and apply various text effects, including distortion and animation effects.

Font packages that provide a variety of specialized fonts can be purchased. You can also use a font editor, for example Fontographer, to create your own font or edit an existing one.

You can use a scanner with an optical character recognition (OCR) program to capture the desired text. You can also download electronic files from the internet to collect text, and use object linking and embedding or cut, copy, and paste to bring the text into your title.

Conversion of text from one format to another format

- Convert to .doc: open MS Word and save the file as .doc.
- Convert text to .doc
- Convert RTF
- Convert RTF to .doc
- Convert .doc to HTML: open MS Word, choose the Save As command from the File menu, then select Web Page from the Save as type combo box.
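
Format conversion can also be done in code rather than through a word processor's menus. The sketch below shows one simple way to turn plain text into a minimal HTML page, assuming that paragraphs in the input are separated by blank lines. The function name `text_to_html` is hypothetical, and the sketch does not reproduce what MS Word's Web Page export does.

```python
import html

def text_to_html(text):
    """Wrap each blank-line-separated paragraph of plain text in a <p> element."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    # html.escape replaces &, < and > so the text cannot be mistaken for markup.
    body = "\n".join(f"<p>{html.escape(p)}</p>" for p in paragraphs)
    return f"<!DOCTYPE html>\n<html>\n<body>\n{body}\n</body>\n</html>"

print(text_to_html("Text & graphics\n\nAudio and video"))
```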

What Does Object Linking and Embedding Mean?

Object linking and embedding (OLE) is a

Microsoft technology that facilitates the sharing of application data and

objects written in different formats from multiple sources. Linking establishes

a connection between two objects, and embedding facilitates application data

insertion.

An OLE object may display as an icon. Double

clicking the icon opens the associated object application or asks the user to

select an application for object editing.

Alternatively, an OLE object may display as

actual contents, such as a graph or chart. For example, an external application

chart, such as an Excel spreadsheet, may be inserted into a Word application.

When the chart is activated in the Word document, the chart’s user interface

loads, and the user is able to manipulate the external chart’s data inside the

Word document.

OLE-supported software applications include:

- Microsoft Windows applications, such as Excel, Word and PowerPoint

- Corel WordPerfect

- Adobe Acrobat

- AutoCAD

- Multimedia applications, like photos, audio/video clips and PowerPoint presentations.

OLE has certain disadvantages, as follows:

- Embedded objects increase the host document file size, resulting in potential storage or loading difficulties.

- Linked objects can break when a host document is moved to a location that does not have the original object or its application.

- Interoperability is limited. If the embedded or linked object application is unavailable, the object cannot be manipulated or edited.

Unit 2

Sound is a pressure wave created by a vibrating object. The vibrations set particles in the surrounding medium (typically air) in vibrational motion, thus transporting energy through the medium. Since the particles move parallel to the direction of wave travel, a sound wave is referred to as a longitudinal wave. Longitudinal waves create compressions and rarefactions within the air.

Sound attributes:

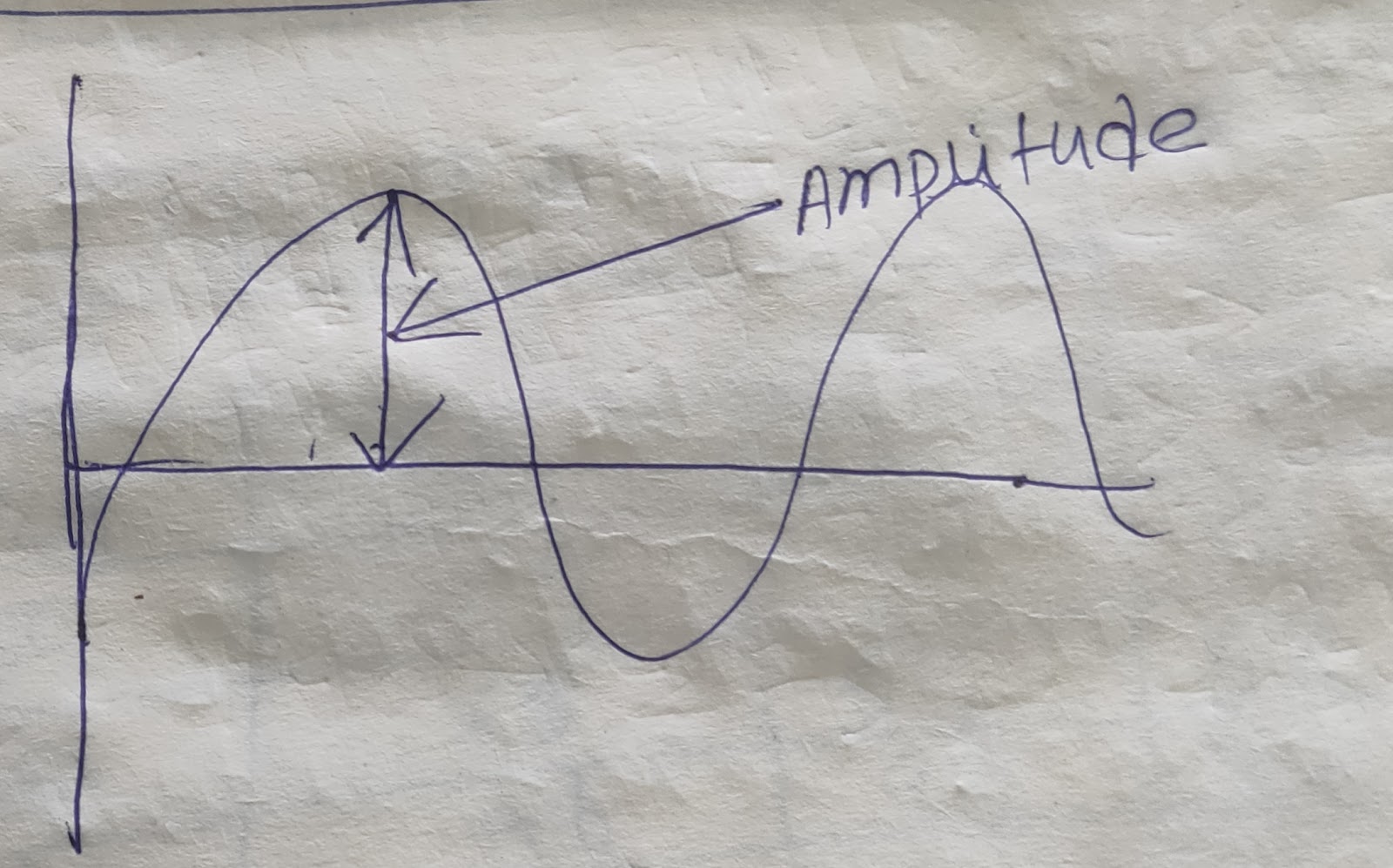

Amplitude: The amplitude of a sound wave is a measure of the height of the wave. Amplitude determines whether a sound is loud or soft.

Frequency: The total number of waves produced in one second is called the frequency of the wave. Its SI unit is the hertz (Hz).

Speed: The distance that a compression or rarefaction of a wave travels per unit time is called the speed of sound. Its SI unit is the meter per second (m/s).

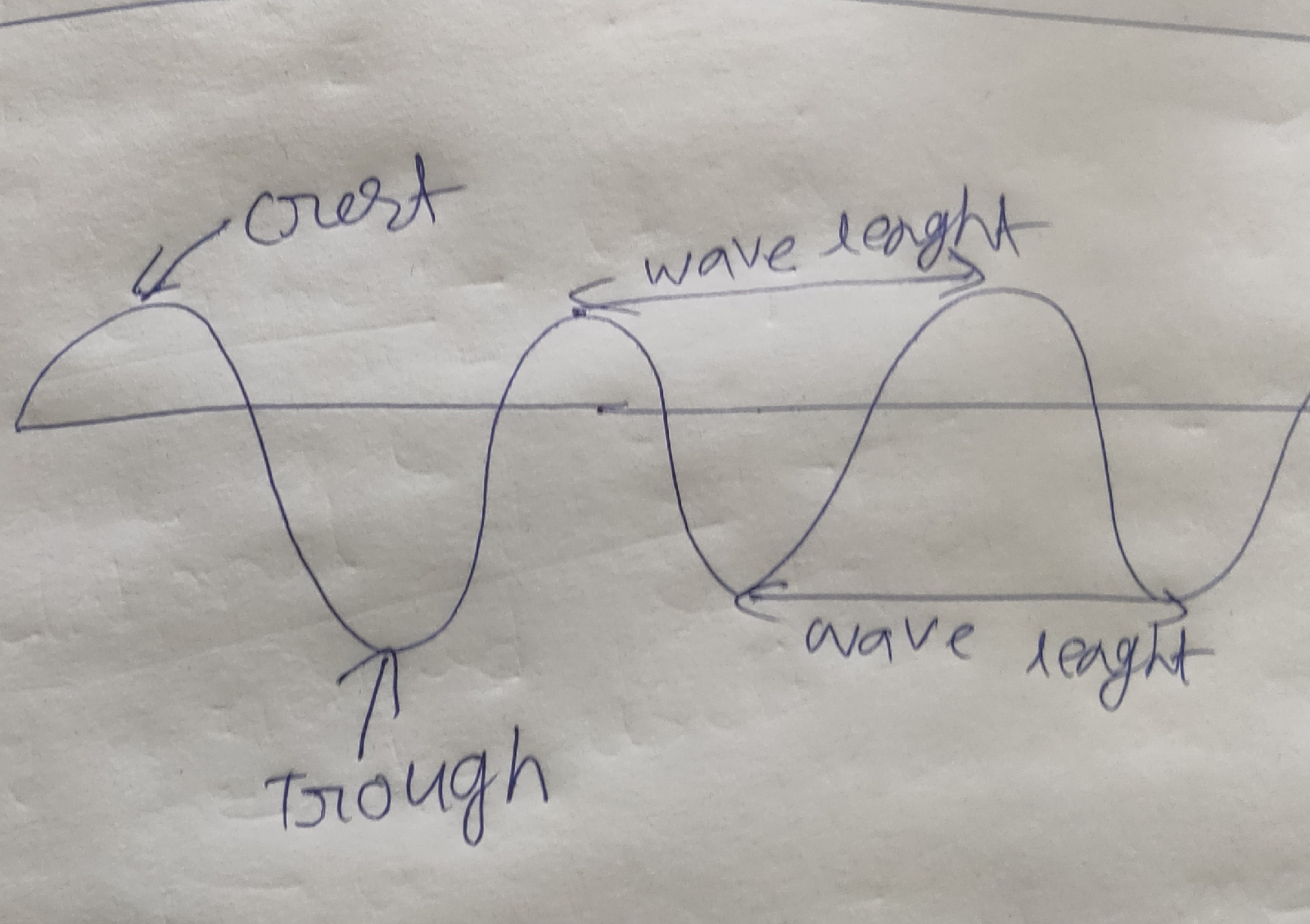

Crest: The peak (highest point) of the curve.

Trough: The lowest point of the curve.

Wavelength: The distance between two successive crests or troughs of a wave.

Time period: The time taken for one complete oscillation through a medium is called the time period.

Pitch: Pitch depends on the frequency of vibration of the wave. If the frequency is higher, the sound is shrill and has a high pitch. A sound with a lower pitch has a lower frequency of vibration.
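
The attributes above are linked by two standard relations: the time period is the reciprocal of the frequency (T = 1 / f), and the speed equals frequency times wavelength (v = f × λ). The short sketch below applies them. The value of 343 m/s for the speed of sound in air is an assumed approximate figure for room temperature, and the function names are only illustrative.

```python
SPEED_OF_SOUND_AIR = 343.0  # assumed approximate speed in air, in m/s

def time_period(frequency_hz):
    """Time for one complete oscillation, in seconds (T = 1 / f)."""
    return 1.0 / frequency_hz

def wavelength(speed_m_per_s, frequency_hz):
    """Distance between successive crests, in meters (lambda = v / f)."""
    return speed_m_per_s / frequency_hz

# A 440 Hz tone: higher frequency means shorter period and shorter wavelength.
print(time_period(440.0))
print(wavelength(SPEED_OF_SOUND_AIR, 440.0))
```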

A sound effect (or audio

effect) is an artificially created or enhanced sound, or sound process used

to emphasize artistic or other content of films, television shows, live

performance, animation, video games, music, or other media.

Many Song Meter recorders can record on two separate channels, including all SM2s, all SM3s, and the SM4 (non-ultrasonic). These channels are marked as channel 0, or the left channel, and channel 1, or the right channel. When you configure these units to record in stereo, the recordings they save will contain two channels. If you listen to these files in a conventional audio player, you will hear both channels simultaneously from your left and right speakers. If you open the files in Kaleidoscope, you can switch between viewing and hearing the left and right channels. Using Kaleidoscope, you can also split these stereo files into two mono, single-channel files.

Because

the Song Meters listed above can only record on two channels maximum, plugging

in an external microphone will override one of the internal microphones on an

SM3 or SM4. If you plug one SMM-A2 into channel 0 of an SM4, the left channel

will be recorded from that SMM-A2, and the right channel will be recorded from

the right-hand built-in microphone if the recorder is configured for stereo

recording.
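
To illustrate what splitting a stereo file into two mono files involves, here is a minimal sketch. It assumes the samples are stored interleaved (left, right, left, right, ...), as they are in uncompressed PCM audio. The function name `split_stereo` is hypothetical and this is not how any particular program implements the feature.

```python
def split_stereo(interleaved):
    """Split interleaved stereo samples [L0, R0, L1, R1, ...] into two mono lists."""
    if len(interleaved) % 2 != 0:
        raise ValueError("stereo data must contain an even number of samples")
    left = interleaved[0::2]   # channel 0: every even-indexed sample
    right = interleaved[1::2]  # channel 1: every odd-indexed sample
    return left, right

left, right = split_stereo([10, -10, 20, -20, 30, -30])
print(left, right)
```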

How much space an uncompressed audio file will take up on your computer's storage can be estimated from its bit rate and duration:

bit rate = bit depth * sample rate * number of channels

file size = bit rate * duration
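
As a worked example of this calculation, the sketch below estimates the size of uncompressed audio. For stereo, the bit rate is also multiplied by the number of channels, and the result is divided by 8 to convert bits to bytes. The function name is hypothetical, and the estimate ignores the small header that real file formats add.

```python
def audio_file_size_bytes(sample_rate_hz, bit_depth, channels, seconds):
    """Estimate the size of uncompressed audio in bytes (file header ignored)."""
    bit_rate = sample_rate_hz * bit_depth * channels  # bits per second
    return bit_rate * seconds / 8                     # 8 bits per byte

# Three minutes of CD-quality stereo: 44.1 kHz, 16 bits, 2 channels.
size = audio_file_size_bytes(44100, 16, 2, 180)
print(size / 1_000_000, "MB")
```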

What is a Sound Card?

A sound card is an expansion component inside the computer, also referred to as a soundboard or audio card. It provides audio input and output capabilities, so that sound can be heard through speakers or headphones. Although a computer does not strictly need a sound card, almost every machine includes one, either built into the motherboard (onboard) or in an expansion slot. A sound card is configured and used through a device driver and a software application.

Usually, an input device such as a microphone is attached to receive audio data, while speakers or headphones are used to output it. Most headphones use a 3.5 mm minijack connector. Some sound cards also support digital audio input and output, either through an optical audio port such as a Toslink connector or through a standard TRS (tip-ring-sleeve) connection. The primary function of a sound card is to convert incoming digital audio data into analog audio that speakers can play. In the reverse direction, analog audio from a microphone is converted into digital data by the sound card. This data can be stored on the computer and modified using audio software.
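
The analog-to-digital step can be pictured as sampling the signal at regular intervals and rounding each sample to an integer. The following is a rough sketch only: a generated sine wave stands in for the analog microphone signal, the function name is hypothetical, and real sound cards do this in hardware.

```python
import math

def sample_sine(frequency_hz, sample_rate_hz, n_samples, bit_depth=16):
    """Sample a full-scale sine wave and quantize it to signed integers."""
    max_amplitude = 2 ** (bit_depth - 1) - 1  # 32767 for 16-bit audio
    samples = []
    for n in range(n_samples):
        t = n / sample_rate_hz                        # time of the n-th sample
        value = math.sin(2 * math.pi * frequency_hz * t)
        samples.append(round(max_amplitude * value))  # quantization step
    return samples

# One cycle of a 1 kHz tone sampled at 8 kHz gives 8 integer samples.
print(sample_sine(1000, 8000, 8))
```

A higher sample rate captures more points per second, and a higher bit depth gives each point a finer range of values.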

While many machines contain a separate card in a PCI slot, in some computers the sound card is part of the motherboard. You can also install a new (professional) sound card if you need to extend the audio capabilities of your computer. Professional sound cards may have more inputs and outputs and may support higher sampling rates, such as 192 kHz rather than 44.1 kHz. Instead of 3.5 mm jacks, some sound cards include 1/4 in. connectors that accommodate most instrument outputs.

Sound Card Description

A sound card is a rectangular piece of hardware with multiple contacts along its bottom edge and several ports on one side for connecting audio devices such as speakers. Because the motherboard, peripheral cards, and case are designed with compatibility in mind, the port side of an installed sound card sits at the back of the case, which makes the ports easy to reach. There are also USB sound cards, which let you plug microphones, headphones, and other audio devices into a small adapter that connects directly to a USB port.

Types of Sound Cards

Motherboard Sound Chips

When sound cards were first introduced, they were costly add-on cards that sold for hundreds of dollars. As computer sound technology became cheaper, miniaturization allowed hardware manufacturers to put sound production onto a single chip. Today it is rare to find a computer without a motherboard sound chip, even among machines that also contain a separate sound card. Motherboard sound chips made sound affordable for all computer owners. You can check whether your system has a motherboard sound chip.

Standard Sound Cards

A standard sound card connects to one of the expansion slots inside the computer. The benefit of using a sound card rather than a motherboard sound chip is that the card contains its own processor chips, whereas a motherboard sound chip relies on the computer's processor to produce sound. A standard sound card therefore offers better performance when playing games, because it places less load on the main processor.

External Sound Adapters

An external sound adapter has all the same features as a standard sound card. It is a small box that connects to the computer through a USB or FireWire port instead of an internal expansion slot. It sometimes offers features that a standard sound card lacks, such as physical volume control knobs and extra inputs and outputs. An external sound adapter is also much easier to move to a new computer than a standard sound card. For a laptop, which typically has USB or FireWire ports rather than internal expansion slots, an external adapter is the only way to upgrade the sound.

Uses of a sound card

The primary use of a sound card is to provide the sound you hear when playing music in various formats and with varying degrees of control. The source of the sound may be streamed audio, a file, a CD or DVD, and so on. A sound card is used in many computer applications, including the following:

- Games.

- Voice recognition.

- Watch movies.

- Creating and playing MIDI.

- Educational software.

- Audio and video conferencing.

- Business presentations.

- Record dictations.

- Audio CDs and listening to music.

Sound Cards and Audio Quality

Instead of a sound expansion card, many modern computers have the same technology integrated directly onto the motherboard. This is known as an on-board sound card. The configuration makes for a slightly less powerful audio system but allows for a less expensive computer, and it is adequate for almost all computer users. Dedicated sound cards are usually necessary only for the serious audio professional. On most desktop computers, the front-facing headphone jacks and USB ports share a common ground wire, so if you also have USB devices plugged in, you may hear static in your headphones.

WHAT IS FM SYNTHESIS?

FM synthesis is short for frequency modulation synthesis. Simply put, FM synthesis uses one signal, called the “modulator”, to modulate the pitch of another signal, the “carrier”, that is in the same or a similar audio range. This creates brand new frequency information in the resulting sound, changing the timbre without the use of filters.

For clarity’s sake, “timbre” is the distinctive character of a sound. The

timbre of a sound depends upon its frequency content. The frequencies present

in a piano sound, for example, are different than those in a guitar sound, even

at the same pitch and level. This is what makes them sound different.
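
The modulator and carrier relationship can be sketched in a few lines. The example below uses a common textbook form of FM synthesis, in which a sine-wave modulator is added to the phase of a sine-wave carrier. The parameter `index` (modulation depth) and the function names are assumptions made for illustration, and commercial synthesizers are considerably more elaborate.

```python
import math

def fm_sample(t, carrier_hz, modulator_hz, index):
    """One sample of a simple two-oscillator FM tone at time t (seconds)."""
    modulator = math.sin(2 * math.pi * modulator_hz * t)
    # The modulator shifts the carrier's phase; a larger index adds more new frequencies.
    return math.sin(2 * math.pi * carrier_hz * t + index * modulator)

def fm_tone(carrier_hz, modulator_hz, index, sample_rate_hz, n_samples):
    """Generate n_samples of the FM tone as floats between -1.0 and 1.0."""
    return [fm_sample(n / sample_rate_hz, carrier_hz, modulator_hz, index)
            for n in range(n_samples)]

tone = fm_tone(carrier_hz=440.0, modulator_hz=220.0, index=2.0,
               sample_rate_hz=44100, n_samples=1000)
print(len(tone), min(tone), max(tone))
```

With `index` set to 0 the modulator has no effect and the result is a plain sine wave at the carrier frequency. Raising it changes the timbre without any filtering.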

What Does Wavetable Synthesis Mean?

Wavetable synthesis is a method for generating

sounds from signals of a digital nature. The technique stores digital sound

samples from various sources, which can later be modified, enhanced or combined

for reproducing sounds. Wavetable synthesis is considered one of the oldest

methods for generating sounds from computers. It differs from simple PCM sample

playback because wavetable synthesis relies on looping over the buffer instead

of simply a “read once” method. However, wavetable synthesis is similar in many

ways to simple digital sine wave generation and digitally controlled oscillator

function. Wavetable synthesis is widely used in many areas such as in

production of sinusoidal signals.
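
The looping idea can be shown with a minimal sketch: one cycle of a waveform is stored in a table, and an oscillator reads through the table repeatedly, stepping faster for higher pitches. The names below are hypothetical. The sketch simply truncates the read position to the nearest lower table entry, whereas practical implementations usually interpolate between entries.

```python
import math

def make_sine_table(size):
    """Store one cycle of a sine wave as a list of `size` samples."""
    return [math.sin(2 * math.pi * i / size) for i in range(size)]

def wavetable_oscillator(table, frequency_hz, sample_rate_hz, n_samples):
    """Generate samples by looping over the table at a pitch-dependent step."""
    step = frequency_hz * len(table) / sample_rate_hz  # table entries per output sample
    phase = 0.0
    output = []
    for _ in range(n_samples):
        output.append(table[int(phase)])     # read the current table entry
        phase = (phase + step) % len(table)  # wrap around: this is the loop
    return output

table = make_sine_table(8)
print(wavetable_oscillator(table, 1000, 8000, 16))
```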

What is MIDI (Musical Instrument Digital Interface)?

Musical Instrument Digital Interface (MIDI) is a standard for transmitting and storing music, originally designed for digital music synthesizers. MIDI does not transmit recorded sounds. Instead, it carries musical notes, timings, and pitch information, which the receiving device uses to play music from its own sound library.
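
To show how little data a MIDI note needs, the sketch below builds the three bytes of a MIDI "note on" message: a status byte (0x90 plus the channel number), the note number, and the velocity. The helper names are hypothetical, and sending the bytes to a real device would need a MIDI library or interface that is not shown here.

```python
def note_on(channel, note, velocity):
    """Return the 3-byte MIDI message that starts a note."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be 0-127")
    return bytes([0x90 | channel, note, velocity])

def note_off(channel, note):
    """Return the 3-byte MIDI message that stops a note."""
    if not (0 <= channel <= 15 and 0 <= note <= 127):
        raise ValueError("channel must be 0-15 and note 0-127")
    return bytes([0x80 | channel, note, 0])

# Middle C (note number 60) on the first channel, played fairly loudly.
message = note_on(0, 60, 100)
print(message.hex())
```

No audio is contained in these bytes. The receiving instrument decides what note 60 actually sounds like.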

Before MIDI, digital piano keyboards, music

synthesizers and drum machines from different manufacturers could not talk to

each other.

MIDI was developed in the early 1980s to provide

interoperability between digital music devices. It was spearheaded by the

president of Roland instruments and developed with Sequential Circuits, an

early synthesizer company that Yamaha purchased in 1987. Other early adopters

included Yamaha, Korg, Kawai and Moog.

The first MIDI-compatible instruments were released

in 1983.

What Is An MP3 File?

An MP3 file is an audio

file that uses a compression algorithm to reduce the overall file size. It's

known as a "lossy" format because that compression is irreversible

and some of the source's original data is lost during the compression. It's

still possible to have fairly high-quality MP3 music files, though. Compression is a common technique for reducing the amount of storage that audio, video, and image files take up. While a 3-minute uncompressed file, such as a Waveform Audio file (WAV), can be around 30 MB in size, the same audio as a compressed MP3 would be only around 3 MB. That is a reduction of about 90% while retaining near-CD quality.
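
These figures can be checked with a little arithmetic. The sketch below assumes an MP3 encoded at a constant 128 kbps, a common setting that the text above does not specify, and compares it with three minutes of uncompressed CD-quality stereo. The function names are hypothetical.

```python
def mp3_size_bytes(bit_rate_kbps, seconds):
    """Approximate size of a constant-bit-rate MP3 in bytes."""
    return bit_rate_kbps * 1000 * seconds / 8

def saving(uncompressed_bytes, compressed_bytes):
    """Fraction of space saved by compression (0.9 means 90% smaller)."""
    return 1 - compressed_bytes / uncompressed_bytes

seconds = 180
wav_bytes = 44100 * 2 * 2 * seconds      # 44.1 kHz, 2 bytes per sample, 2 channels
mp3_bytes = mp3_size_bytes(128, seconds)  # assumed 128 kbps encoding
print(wav_bytes / 1e6, mp3_bytes / 1e6, saving(wav_bytes, mp3_bytes))
```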

What is 3D Audio?

3D Audio is an umbrella term for a number of

immersive audio technologies that aim to surround the listener with

sound.

The goal is to reproduce audio in a way that

replicates the way we hear sound in the real world, especially when compared to

the mono and stereo experiences most have become accustomed to.

Sound Recording And Sound editing:

Audacity is a free and open-source digital audio

editor and recording application software, available for Windows, macOS, Linux,

and other Unix-like operating systems. As of December 6, 2022, Audacity is the

most popular download at FossHub, with over 114.2 million downloads since March

2015.

Advantages

1. Free to use for your projects.

2. Compatible with multiple operating systems, such as Windows, macOS, and Linux.

3. A small-sized software package that requires

less storage space.

4. It is an open-source platform with strong

community backing, constantly striving for enhanced performance.

Features of Audacity

- Record live sound and audio playback on a PC.

- Convert music tapes and save them to MP3 o CD.

- Edit multiple audio formats such as MP2, MP3, AIFF, WAV, and FLAC.

- Take different sound files and copy, cut, mix, or splice them together.

- Change the pitch or speed of a sound recording.

- It can be used for various uses, from creating interviews, working

on voiceovers, editing music, or anything else involved with voice or

sound. If you are in a music band, you can use Audacity to release demos

of your songs. If you are new to exploring Audacity, you can begin by

searching for online tutorials about its usage. You will find plenty of

resources on YouTube and online forums about instructions on mastering

Audacity.

Sound Forge is a digital audio editing suite by Magix Software GmbH, which is aimed at the professional and semi-professional markets. There are two versions of Sound Forge: Sound Forge Pro 12, released in April 2018, and Sound Forge Audio Studio 13, released in January 2019.

THE IMPORTANCE OF VIDEO AND IMAGES

Video and images are incredibly important in

capturing audience attention and cannot be underestimated in our increasingly

visual world.

The ease with which we can produce, edit and

share images, video, graphics and words makes it simpler than ever to create

engaging content. The combination of images or video and an attention-grabbing

headline can stop viewers from moving on.

With the growth of social media platforms like

Facebook, Instagram, Tumblr and Pinterest, it is important to use images and

video for your company’s marketing and public relations strategies.

Pictures and video help to grab our attention

and can incentivise a viewer to continue through the article or video. Bright

colours capture our attention along with content which is funny, unusual,

provocative, surprising and/or eye-catching.

An image or video that makes the viewer want

to know more or find out what’s next is the best way to attract and maintain

attention.

Additionally, sometimes conveying a complex

message can be difficult. A larger image with a mix of text and smaller visuals

(also known as an infographic) can help explain these tough concepts without

taking up too much space (see image).

On social media platforms, high-quality, attention-grabbing images can keep a viewer engaged. It is better to steer clear of

common stock photography and use high quality unique images. Images and

graphics can help significantly in sharing and views.

Beautiful images are important for your

audience but having accurate photo descriptions is also useful for better

search engine optimisation. By having accurate captions, you can take advantage

of the search engine traffic related to your image.

With high levels of phone use, it is also

useful to check how images will appear on phones or tablets, to ensure the

image is correctly displayed. Make sure you resize the image if needed in order

to better suit the platform you are using.

Simple images and video are an effective

choice, and conceptual or artistic content should be used when you wish to

provoke an emotional response.

Raster images use bitmaps to store information. This means a large image needs a large bitmap. The larger the image, the more disk space the image file

will take up. As an example, a 640 x 480 image requires information to be

stored for 307,200 pixels, while a 3072 x 2048 image (from a 6.3 Megapixel

digital camera) needs to store information for a whopping 6,291,456 pixels. We

use algorithms that compress images to help reduce these file sizes. Image

formats like JPEG and GIF are common compressed image formats. Scaling down

these images is easy but enlarging a bitmap makes it pixelated or simply

blurred. Hence for images that need to scale to different sizes, we use vector

graphics.

File extensions: .BMP, .TIF, .GIF, .JPG
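The pixel counts quoted above follow directly from width times height. The sketch below also estimates the uncompressed size, assuming 24 bits (3 bytes) per pixel; that color depth is an assumption for illustration.

```python
def raster_stats(width, height, bits_per_pixel=24):
    """Return (pixel count, uncompressed size in bytes) for a bitmap."""
    pixels = width * height
    return pixels, pixels * bits_per_pixel // 8


for width, height in [(640, 480), (3072, 2048)]:
    pixels, size = raster_stats(width, height)
    print(f"{width} x {height}: {pixels:,} pixels, {size / 1e6:.1f} MB uncompressed")
```

The larger image holds about twenty times as many pixels, which is why compression matters more as resolution grows.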

Vector graphics are images defined by sequential commands or mathematical statements that place lines and shapes in a 2-D or 3-D space. Vector graphics are well suited to printing because they are composed of a series of mathematical curves; as a result, they print crisply even when they are enlarged. In physics, a vector is a quantity that has both magnitude and direction. In vector graphics, the file is created and saved as a sequence of vector statements. Rather than storing a value in the file for every pixel of a line drawing, we store commands that describe a series of points to be connected. As a result, a much smaller file is obtained.

File extensions: SVG, EPS, PDF, AI, DXF
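As a rough illustration of "commands which describe a series of points to be connected", the sketch below builds a tiny SVG document as text. The helper name `polyline_svg` and the triangle coordinates are made up for this example.

```python
def polyline_svg(points, size=100):
    """Build a minimal SVG document that connects the given points."""
    coords = " ".join(f"{x},{y}" for x, y in points)
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 {size} {size}">'
        f'<polyline points="{coords}" fill="none" stroke="black"/>'
        "</svg>"
    )


# Four points describe a closed triangle; no per-pixel data is stored.
triangle = [(10, 90), (50, 10), (90, 90), (10, 90)]
svg_text = polyline_svg(triangle)
print(svg_text)
print(len(svg_text), "characters, whatever size the drawing is displayed at")
```

The same short description can be rendered at any size, which is the reason vector files stay small and scale cleanly.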

Difference between regular graphics and interlaced graphics

The main difference between regular graphics and interlaced graphics is the way they are displayed on a screen. Regular graphics, also known as non-interlaced or progressive graphics, are displayed on a screen by drawing every line of the image in sequence from top to bottom. This produces a smooth, flicker-free image that is easy to view. In contrast, interlaced graphics are displayed in two passes: one pass draws every other line from top to bottom, and the next pass fills in the lines that were skipped. Because each pass carries only half of the lines, the image can appear to flicker, and it can be harder to view for some people. Interlacing is often used in television and video displays to reduce the amount of data that needs to be transmitted, but it is less common in computer displays.
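The difference in drawing order can be shown with a small sketch. Line numbers start at 0 here, and the two function names are illustrative only.

```python
def progressive_order(line_count):
    """Non-interlaced: every line, top to bottom, in a single pass."""
    return list(range(line_count))


def interlaced_order(line_count):
    """Interlaced: every other line first, then the skipped lines."""
    first_pass = list(range(0, line_count, 2))
    second_pass = list(range(1, line_count, 2))
    return first_pass + second_pass


print(progressive_order(8))  # [0, 1, 2, 3, 4, 5, 6, 7]
print(interlaced_order(8))   # [0, 2, 4, 6, 1, 3, 5, 7]
```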

Capturing images can be done through various

methods, each employing different technologies and mechanisms. Here are some

common image capturing methods:

1. Digital Cameras:

- Compact Digital Cameras: These

are small, portable cameras with built-in lenses.

- Digital Single-Lens Reflex

(DSLR) Cameras: Higher-end cameras with interchangeable lenses and

advanced features.

- Mirrorless Cameras: Similar

to DSLRs but without the mirror mechanism, making them more compact.

2. Smartphones:

- Built-in Cameras: Most

smartphones are equipped with built-in cameras that capture images and videos.

- Multiple Lenses: Some

smartphones have multiple lenses for different purposes, such as wide-angle or

telephoto.

3. Webcams:

- Integrated Webcams: Built

into laptops, monitors, or external devices for video conferencing and simple

image capture.

4. Action Cameras:

- Designed for Action Shots: Compact

and rugged cameras designed for capturing action and adventure activities.

5. Drones:

- Equipped Cameras: Drones

often have built-in cameras for capturing aerial images and videos.

6. CCTV Cameras:

- Closed-Circuit Television

Cameras: Used for surveillance and security,

capturing images in specific locations.

7. Scanner:

- Flatbed Scanners: Used

for scanning physical documents or images into digital formats.

8. Satellite Imagery:

- Satellite Cameras: Capture

images of Earth from space for various purposes, including mapping and

environmental monitoring.

9. Medical Imaging Devices:

- X-ray Machines, MRI, CT

Scanners: Used in the medical field for capturing

internal images of the human body.

10. Digital Image Sensors:

- CCD (Charge-Coupled Device)

and CMOS (Complementary Metal-Oxide-Semiconductor) Sensors: Found

in various cameras, converting light into digital signals.

11. Thermal Imaging Cameras:

- Infrared Cameras: Capture

heat signatures rather than visible light, used in applications like night

vision and industrial inspections.

12. 3D Scanning:

- Structured Light Scanning,

Laser Scanning: Capture three-dimensional information of

objects or environments.

13. Motion Cameras:

- High-Speed Cameras: Capture

fast-moving events with high frame rates for slow-motion playback.

14. Pinhole Cameras:

- Basic Optical Devices:

Use a small hole to project an inverted image onto a surface, often used for

educational purposes.

15. Hybrid Imaging Devices:

- Combination of

Technologies: Some devices combine different imaging

technologies for specific applications.

These methods vary in terms of purpose,

technology, and application, catering to a wide range of needs from personal

photography to industrial and scientific imaging.

RGB stands for Red, Green, Blue, and it refers to a color model in which colors are represented as combinations of these three primary colors. In the RGB color model, colors are created by varying the intensity of each of the three primary colors. Each color is represented by a set of three values, usually in the range of 0 to 255, indicating the intensity of red, green, and blue.

For example, the color black is represented as (0, 0, 0), indicating zero intensity for red, green, and blue. White is represented as (255, 255, 255), indicating maximum intensity for all three colors. Other colors are created by mixing different intensities of red, green, and blue.

The

RGB color model is widely used in electronic displays, such as computer

monitors, television screens, digital cameras, and other devices that produce

colored light. It is an additive color model, meaning that colors are created

by adding different intensities of light.
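Additive mixing can be sketched by summing the channels of two or more colors and clamping each result at 255. The helper name `add_light` is hypothetical.

```python
def add_light(*colors):
    """Additively mix RGB colors, clamping each channel to the 0..255 range."""
    return tuple(min(255, sum(channel)) for channel in zip(*colors))


RED, GREEN, BLUE = (255, 0, 0), (0, 255, 0), (0, 0, 255)
print(add_light(RED, GREEN))        # (255, 255, 0), which is yellow
print(add_light(RED, GREEN, BLUE))  # (255, 255, 255), which is white
```

Adding all three primaries at full intensity gives white, which is the opposite of what happens when inks are mixed.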

CMYK

stands for Cyan, Magenta, Yellow, and Key (black). It is a subtractive color

model used in color printing. Unlike the RGB color model, which is additive and

used in electronic displays, the CMYK model is subtractive and is used in the

printing process.

In CMYK, colors are created by layering varying percentages of light-absorbing inks (cyan, magenta, yellow, and black) on a white background; each ink subtracts part of the light that the paper would otherwise reflect. Each color is represented by a set of four values, typically expressed as percentages. Here's a brief overview of each ink's role:

1. Cyan (C): Absorbs red light.

2. Magenta (M): Absorbs green light.

3. Yellow (Y): Absorbs blue light.

4. Key (K): Represents black, used for detail and to improve color depth. It is labeled "K" to avoid confusion with "B" for blue.

By

combining different percentages of these inks, a wide range of colors can be

achieved for printing purposes. CMYK is commonly used in color printing for

items such as magazines, brochures, posters, and other printed materials. It's

important to note that the CMYK color space is not as expansive as the RGB

color space, so there are some colors that can be represented in RGB but are

challenging to reproduce accurately in CMYK. This is a consideration when

designing graphics or images for both digital and print media.
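The relationship between the two models can be sketched with the simple textbook conversion below. This is only an approximation: it ignores color profiles, and real print workflows rely on profile-based conversion, so treat the numbers as illustrative.

```python
def rgb_to_cmyk(r, g, b):
    """Naive RGB (0..255) to CMYK (0..1) conversion, ignoring color profiles."""
    if (r, g, b) == (0, 0, 0):
        return 0.0, 0.0, 0.0, 1.0  # pure black: key ink only
    r_, g_, b_ = r / 255, g / 255, b / 255
    k = 1 - max(r_, g_, b_)          # black needed for the darkest channel
    c = (1 - r_ - k) / (1 - k)       # cyan absorbs red
    m = (1 - g_ - k) / (1 - k)       # magenta absorbs green
    y = (1 - b_ - k) / (1 - k)       # yellow absorbs blue
    return c, m, y, k


print(rgb_to_cmyk(255, 0, 0))      # (0.0, 1.0, 1.0, 0.0): red = magenta + yellow
print(rgb_to_cmyk(255, 255, 255))  # (0.0, 0.0, 0.0, 0.0): white = bare paper
```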

1. Hue:

- Hue is the type of color, often described as the name of the color itself (e.g., red, green, blue).

- It represents where a color falls on the color wheel.

2. Saturation:

- Saturation refers to the intensity or vividness of a color.

- A highly saturated color is vivid and intense, while a desaturated color is more muted or grayscale.

3. Brightness (or Value):

- Brightness, also known as value or lightness, refers to how light or dark a color is.

- It is the measure of the amount of black or white mixed with a color.

These three components (hue, saturation, and brightness) are often used together to describe and manipulate colors in various color models. One common color model that incorporates these concepts is the HSB/HSV model, where:

- H (Hue) is represented as an angle around a color wheel.

- S (Saturation) is the intensity or purity of the color.

- B (Brightness) represents the lightness or darkness of the color.

It's

important to note that different color models may use slightly different terms

or representations, but the fundamental concepts of hue, saturation, and

brightness are widely used in the study and application of color.
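Python's standard `colorsys` module can be used to see these three components for a given RGB color. The wrapper `rgb_to_hsb` below is a hypothetical helper that scales the result to degrees and percentages.

```python
import colorsys


def rgb_to_hsb(r, g, b):
    """Convert 0..255 RGB to (hue in degrees, saturation %, brightness %)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return round(h * 360), round(s * 100), round(v * 100)


print(rgb_to_hsb(255, 0, 0))      # (0, 100, 100): pure red
print(rgb_to_hsb(0, 0, 255))      # (240, 100, 100): pure blue
print(rgb_to_hsb(128, 128, 128))  # (0, 0, 50): mid gray has no saturation
```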

When referring to the attributes of image size, several key characteristics come into play. These attributes are essential for describing the dimensions and resolution of an image. Here are some common attributes associated with image size:

1. Width and Height:

- The width and height of an image represent the dimensions of the image in pixels or other units. For example, an image with dimensions 800 x 600 pixels has a width of 800 pixels and a height of 600 pixels.

2. Resolution:

- Resolution refers to the amount of detail in an image and is often measured in pixels per inch (PPI) or dots per inch (DPI). Higher resolution generally means more detail, but it also results in larger file sizes. Common resolutions for web images are 72 or 96 PPI, while print images may require higher resolutions, such as 300 DPI.

3. Aspect Ratio:

- The aspect ratio is the proportional relationship between the width and height of an image. Common aspect ratios include 4:3 (standard television), 16:9 (widescreen television), and 3:2 (common in photography).

4. File Size:

- The file size is the amount of digital storage space an image occupies. It is measured in bytes, kilobytes (KB), megabytes (MB), or gigabytes (GB). File size is influenced by factors such as image dimensions, color depth, and compression.

5. Color Depth:

- Color depth, also known as bit depth, determines the number of colors that can be represented in an image. Common color depths include 8-bit (256 colors), 24-bit (16.7 million colors), and 32-bit (with an additional alpha channel for transparency).

6. File Format:

- The file format specifies how image data is stored. Common image file formats include JPEG, PNG, GIF, and TIFF. Each format has its own compression methods, transparency support, and other features.

7. Pixel Count:

- The total number of pixels in an image is referred to as the pixel count. It is calculated by multiplying the width and height of the image. For example, an image with dimensions 1200 x 800 pixels has a pixel count of 960,000 pixels.

Understanding

these attributes is crucial when working with images, whether for digital

media, print, or other applications. Adjusting these parameters can impact the

visual quality, file size, and suitability of an image for specific purposes.
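Several of these attributes can be derived from the width and height alone. The sketch below assumes 24-bit color and a 300 PPI print target; the function name `describe_image` and the dictionary keys are made up for this example.

```python
from math import gcd


def describe_image(width_px, height_px, bits_per_pixel=24, ppi=300):
    """Derive common size attributes from pixel dimensions."""
    divisor = gcd(width_px, height_px)
    return {
        "pixel_count": width_px * height_px,
        "aspect_ratio": f"{width_px // divisor}:{height_px // divisor}",
        "uncompressed_bytes": width_px * height_px * bits_per_pixel // 8,
        "print_size_inches": (width_px / ppi, height_px / ppi),
    }


info = describe_image(1200, 800)
print(info["pixel_count"])         # 960000
print(info["aspect_ratio"])        # 3:2
print(info["uncompressed_bytes"])  # 2880000
print(describe_image(800, 600)["aspect_ratio"])  # 4:3
```

At 300 PPI the 1200 x 800 image prints at only 4 inches wide, which shows why print work needs many more pixels than web work.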

An image format describes how the data for an image is stored. Data can be stored in a compressed, uncompressed, or vector format. Each image format has its own advantages and disadvantages. Formats such as TIFF are good for printing, while JPG and PNG are best for the web.

- TIFF (.tif, .tiff): Tagged Image File Format stores image data without losing any of it. TIFF files are typically saved uncompressed or with lossless compression, so image quality is high but the files are large. This makes the format a good fit for printing, including professional printing.

- JPEG (.jpg, .jpeg): Joint Photographic Experts Group is a lossy format in which some data is discarded to reduce the size of the image. The loss is usually small enough to be hard to notice. It is a very common format and is good for digital cameras, nonprofessional prints, e-mail, PowerPoint, etc., making it ideal for web use.

- GIF (.gif): Graphics Interchange Format files are used for web graphics. They can be animated, support transparency, and are limited to 256 colors. GIF files are typically small in size and are portable.

- PNG (.png): Portable Network Graphics is a lossless image format. It was designed to replace GIF, which supports only 256 colors, whereas PNG supports more than 16 million colors.

- Bitmap (.bmp): The Bit Map image file format was developed by Microsoft for Windows. Like TIFF, it is typically stored without lossy compression. Because BMP is a proprietary format, it is generally recommended to use TIFF files instead.

- EPS (.eps): An Encapsulated PostScript file is a common vector file type. EPS files can be opened in applications such as Adobe Illustrator or CorelDRAW.

- RAW image files (.raw, .cr2, .nef, .orf, .sr2): These files are unprocessed and are created by a camera or scanner. Many digital SLR cameras can shoot in RAW, whether it be a .raw, .cr2, or .nef. These images are the equivalent of a digital negative, meaning that they hold a lot of image information. They need to be processed in an editor such as Adobe Photoshop or Lightroom. The format saves metadata and is used for photography.
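A file extension is only a label; several of the raster formats above can also be recognised from the first few bytes of the file, often called a signature or magic number. The sketch below checks a handful of well-known signatures. The function name `sniff_image_format` is hypothetical, and the list is deliberately incomplete.

```python
SIGNATURES = [
    (b"\x89PNG\r\n\x1a\n", "PNG"),
    (b"\xff\xd8\xff", "JPEG"),
    (b"GIF87a", "GIF"),
    (b"GIF89a", "GIF"),
    (b"BM", "BMP"),
    (b"II*\x00", "TIFF"),  # little-endian TIFF
    (b"MM\x00*", "TIFF"),  # big-endian TIFF
]


def sniff_image_format(header: bytes) -> str:
    """Guess an image format from the leading bytes of a file."""
    for magic, name in SIGNATURES:
        if header.startswith(magic):
            return name
    return "unknown"


print(sniff_image_format(b"GIF89a" + bytes(10)))           # GIF
print(sniff_image_format(b"\x89PNG\r\n\x1a\n" + bytes(8)))  # PNG
print(sniff_image_format(b"plain text"))                    # unknown
```

To use it on a real file, read the first dozen bytes in binary mode and pass them to the function.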

What is a DIB file?

A Device-Independent Bitmap (DIB) is a raster image file that is similar in structure to standard Bitmap (BMP) files. It contains a color table that describes the mapping of RGB colors to the pixel values. This enables a DIB to represent an image on any device. It can be opened with almost all applications that can open a standard BMP file on Windows as well as macOS. DIB files are binary files and have a complex file format similar to BMP. DIB images are independent of the output capabilities of rendering devices in terms of color depth and pixels per inch.

What is in a CIF file?

Crystallographic Information

File (CIF) is a standard text file format for

representing crystallographic information, promulgated by the

International Union of Crystallography (IUCr).

What is a PIC file?

A PIC file is a bitmap image created by IBM Lotus or a variety of other applications; possible programs include Advanced Art Studio, Micrografx Draw, and Softimage 3D. It should not be confused with the .PICT format.

What is image masking?

Image masking is an extremely useful technique to edit your images in a non-destructive way. With image masking, you can “conceal and reveal,” meaning you can hide portions of your image and display other portions, allowing you much more flexibility in how you edit your images.
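The "conceal and reveal" idea can be sketched with plain lists standing in for a grayscale image. A mask value of 1 reveals a pixel and 0 conceals it; the helper `apply_mask` is hypothetical and returns a new image so the original pixels are never altered.

```python
def apply_mask(pixels, mask, hidden=0):
    """Return a new image showing pixels only where the mask is 1."""
    return [
        [p if m else hidden for p, m in zip(pixel_row, mask_row)]
        for pixel_row, mask_row in zip(pixels, mask)
    ]


image = [[10, 20, 30],
         [40, 50, 60]]
mask = [[1, 0, 1],
        [0, 1, 0]]

masked = apply_mask(image, mask)
print(masked)  # [[10, 0, 30], [0, 50, 0]]
print(image)   # [[10, 20, 30], [40, 50, 60]] (the original is untouched)
```

Because the original data survives, changing the mask later reveals the hidden pixels again, which is what makes the edit non-destructive.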

UNIT 3

What are examples of video files?

Over the history of computers, many different video file formats have been used. Popular video file formats are listed below.

- .MP4

- .WMV

- .AVI

- .MOV

- .3GP

- .WebM

- .FLV

Analog video refers to the transmission or storage

of video signals in an analog format, where the information is represented by

continuously varying electrical voltages or other analog signals. This is in

contrast to digital video, where information is represented in discrete,

digital form as a series of ones and zeros.
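The step from a continuously varying signal to ones and zeros can be sketched as sampling (measuring at fixed time intervals) followed by quantization (rounding each measurement to one of a fixed number of levels). The 1 Hz sine wave, the 8 samples per second, and the 256 levels below are arbitrary choices for illustration.

```python
import math


def sample_and_quantize(signal, duration_s, sample_rate_hz, levels=256):
    """Sample a signal with values in [-1, 1] and map each sample to an integer code."""
    count = int(duration_s * sample_rate_hz)
    codes = []
    for n in range(count):
        value = signal(n / sample_rate_hz)             # sampling: discrete time
        code = round((value + 1) / 2 * (levels - 1))   # quantization: discrete level
        codes.append(code)
    return codes


def analog(t):
    """Stand-in for a continuously varying voltage: a 1 Hz sine wave."""
    return math.sin(2 * math.pi * t)


digital = sample_and_quantize(analog, duration_s=1.0, sample_rate_hz=8)
print(digital)
print([format(code, "08b") for code in digital])  # the same samples as bits
```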

Analog video was the standard for many years before

the widespread adoption of digital technology. In the context of television,

analog video signals were used for over-the-air broadcasts and cable

transmissions. Analog video signals can also be found in older video recording

formats such as VHS (Video Home System) tapes and analog camcorder tapes.

The key characteristics of analog video include:

1. Continuous Signal: Analog video signals are

continuous and vary smoothly over time. Changes in the signal correspond

directly to changes in the visual content.

2. Signal Quality: Analog signals may degrade over

long distances or suffer from interference, resulting in a decrease in signal

quality. This degradation can manifest as visual artifacts such as snow or

ghosting.

3. Resolution: Analog video systems have a limited

resolution compared to modern digital video. Standard analog television, for

example, had lower resolution compared to high-definition digital television.

Digital video refers to the representation, transmission,

and storage of video signals in a digital format. In this context,

"digital" refers to the use of discrete, binary code—combinations of

ones and zeros—to represent visual information. Digital video has become the

standard in modern technology, offering several advantages over analog video.

Here are some key characteristics of digital video:

1. Discrete Representation: Digital video

represents visual information as a series of discrete data points, typically in

the form of pixels. Each pixel is assigned a specific digital value, which

collectively forms the image.

2. Compression: Digital video often uses

compression algorithms to reduce file sizes without significantly sacrificing

quality. Common video compression standards include MPEG (Moving Picture

Experts Group) formats.

3. Resolution: Digital video can achieve higher

resolutions compared to analog video, providing clearer and more detailed

images. Resolutions are often expressed in terms of pixels (e.g., 1920x1080 for

Full HD).

4. Flexibility: Digital video allows for easy

editing, manipulation, and enhancement of content using software tools. It also

supports various multimedia elements, such as text, graphics, and audio, within

the same digital file.

5. Storage and Reproduction: Digital video can be

stored on various digital media (hard drives, solid-state drives, optical

discs) and easily copied or transferred without loss of quality. Digital video

files can also be streamed over networks.

6. Transmission: Digital video is commonly used in

broadcasting, cable television, and online streaming services. It enables

efficient transmission over digital communication channels.

7. Signal Quality: Unlike analog signals that may

degrade over long distances, digital signals can be transmitted over long

distances without loss of quality, assuming appropriate error correction

measures are in place.

Popular digital video formats include AVI, MP4,

MOV, and MKV, among others. Digital video has become the standard in consumer

electronics, including televisions, cameras, smartphones, and streaming

services, as well as in professional video production and broadcasting. The

transition to digital video has significantly improved the quality,

accessibility, and versatility of visual media.

What is NTSC?

Definition

NTSC is the color television standard established

by the National Television System Committee in the United States in 1953.

The NTSC standard's distinguishing feature was that it added color to the

original 1941 black and white television standard in such a way that black and

white TVs continued to work.

Another distinguishing characteristic was that

NTSC's dependency on accurate phase meant that it was difficult to maintain the

color as the signal was transmitted and processed. Television engineers often

joke that NTSC stands for "Never Twice the Same Color."

The NTSC standard adds a color subcarrier which is

quadrature-modulated by two color-difference signals and added to the luminance

signal. The genius of the system is that black and white TVs ignore the color

components, which are beyond the black and white signal's bandwidth.

The NTSC color subcarrier reference is 3.579545MHz.

The horizontal sync rate (H) was adjusted slightly from the black and white

standard's 15.750kHz such that the color subcarrier is 455/2 times H. The

vertical rate is Fv = Fh x 2/525.
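The two relationships above are enough to recover the familiar NTSC scan rates. The sketch below simply rearranges "subcarrier = 455/2 times H" and applies Fv = Fh x 2/525; the variable names are illustrative.

```python
COLOR_SUBCARRIER_HZ = 3_579_545
LINES_PER_FRAME = 525

# Subcarrier = (455 / 2) x H, so H = subcarrier x 2 / 455.
horizontal_hz = COLOR_SUBCARRIER_HZ * 2 / 455
# Fv = Fh x 2 / 525 (two interlaced fields per 525-line frame).
vertical_hz = horizontal_hz * 2 / LINES_PER_FRAME

print(f"Fh = {horizontal_hz:.2f} Hz")  # Fh = 15734.26 Hz
print(f"Fv = {vertical_hz:.2f} Hz")    # Fv = 59.94 Hz
```

This is where the 59.94 Hz field rate of color NTSC comes from, slightly below the 60 Hz of the original black and white standard.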

What is PAL?

Definition

Phase Alternating Line: A television standard used in most of Europe.

Similar to NTSC, but uses subcarrier phase alternation to reduce the sensitivity to phase errors that would be displayed as color errors. Commonly used with 625-line, 50Hz scanning systems, with a subcarrier frequency of 4.43362MHz.
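The PAL figures can be cross-checked in the same way. The line rate follows from the line count and the 50 Hz interlaced field rate; the subcarrier relation used below (283.75 times the line rate, plus a 25 Hz offset) is the commonly cited one for 625-line PAL and is not stated in these notes, so treat it as an added assumption.

```python
LINES_PER_FRAME = 625
FIELD_RATE_HZ = 50

frame_rate_hz = FIELD_RATE_HZ / 2                # two interlaced fields per frame
line_rate_hz = LINES_PER_FRAME * frame_rate_hz   # lines drawn per second
subcarrier_hz = 283.75 * line_rate_hz + 25       # assumed PAL subcarrier relation

print(f"Line rate  = {line_rate_hz:.0f} Hz")          # Line rate  = 15625 Hz
print(f"Subcarrier = {subcarrier_hz / 1e6:.5f} MHz")  # Subcarrier = 4.43362 MHz
```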

HDTV

What is an HDTV?

Definition

High-definition television (HDTV) is an all-digital

system for transmitting a TV signal with far greater resolution than the analog

standards (PAL, NTSC, and SECAM).

A high-definition television set can display several resolutions (up to two million pixels versus a common television set's

360,000). HDTV offers other advantages such as greatly improved color encoding

and the loss-free reproduction inherent in digital technologies.


Video capturing involves recording visual information using various tools and devices. Here's a brief overview of some key aspects related to video capturing:

1. Cameras:

- Traditional Cameras: Digital cameras and DSLRs are commonly used for high-quality video capture. They offer manual control over settings like aperture, shutter speed, and ISO.

- Camcorders: Specifically designed for video recording, camcorders are portable and often come with built-in microphones.

- Action Cameras: Compact and durable, these cameras are suitable for capturing dynamic footage in extreme conditions.

2. Smartphones and Tablets:

- Modern smartphones and tablets often have high-quality cameras capable of recording high-definition videos. There are also various apps available for enhancing video capture on mobile devices.

3. Webcams:

- Built-in or external webcams are commonly used for video conferencing, streaming, and creating online content.

4. Accessories:

- Tripods: Essential for stable shots, tripods come in various sizes and styles.

- Microphones: External microphones can improve audio quality significantly.

- Lighting: Good lighting is crucial for high-quality video. You may use natural light, studio lights, or portable LED lights.

- Stabilizers and Gimbals: These devices help reduce shake and ensure smooth footage, especially during handheld shooting.

5. Capture Cards:

- For professional video capture from external sources (like cameras) to a computer, capture cards are often used.

6. Software:

- Video Editing Software: After capturing video, editing software like Adobe Premiere Pro, Final Cut Pro, or DaVinci Resolve can be used for post-production.

7. Streaming Devices:

- Devices like the Elgato HD60 S are used for capturing and streaming gameplay or other content from gaming consoles.

8. Storage:

- Adequate storage, such as external hard drives, is crucial for storing large video files.

9. Media Formats:

- Different devices and platforms may require specific video formats. Common formats include MP4, AVI, MOV, and MKV.

When capturing video, consider factors like resolution, frame rate, and

lighting to ensure the best results. Whether you're creating content for

personal use, social media, or professional projects, the right combination of

equipment and techniques can make a significant difference in the quality of

your videos.
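Resolution and frame rate together determine how much data a camera produces, which is why storage and compression matter. The sketch below estimates the uncompressed data rate, assuming 24 bits per pixel; real recordings are compressed and are far smaller.

```python
def raw_video_rate(width, height, fps, bits_per_pixel=24):
    """Bytes per second of uncompressed video at the given settings."""
    return width * height * bits_per_pixel // 8 * fps


rate = raw_video_rate(1920, 1080, 30)
print(f"{rate / 1e6:.1f} MB per second uncompressed")  # 186.6 MB per second
print(f"{rate * 60 / 1e9:.1f} GB per minute")          # 11.2 GB per minute
```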

Video capturing involves various techniques to ensure high-quality and visually appealing footage. Here are some key techniques to consider:

1. Stable Shots:

- Use a tripod or stabilizer to avoid shaky footage. Smooth and stable shots contribute to a more professional look.

2. Framing and Composition:

- Follow the rule of thirds for balanced composition. Place key elements along the intersections of the grid lines.

- Consider the foreground, midground, and background to add depth to your shots.

- Experiment with different angles and perspectives to make your footage visually interesting.

3. Focus:

- Ensure proper focus on the subject. Most cameras and smartphones have autofocus, but manual focus can provide more control.

- Use focus tracking when capturing moving subjects.

4. Exposure:

- Adjust exposure settings (aperture, shutter speed, ISO) based on the lighting conditions. Avoid overexposed or underexposed footage.

- Use exposure lock or manual mode for better control.

5. White Balance:

- Set the white balance according to the lighting conditions to avoid color casts. Automatic white balance may not always be accurate.

6. Frame Rate:

- Choose an appropriate frame rate for your project. Common frame rates include 24fps for a cinematic look, 30fps for standard video, and higher frame rates for smooth motion.

7. Panning and Tilting:

- Practice smooth panning and tilting movements for dynamic shots. Use a tripod or a fluid head for controlled motion.

- Avoid abrupt movements that can be distracting.

8. Zooming:

- Use zoom sparingly. Consider physically moving closer to the subject instead of relying on digital zoom, which can reduce image quality.

9. Lighting:

- Natural light is often preferable, but if using artificial lighting, diffuse it to avoid harsh shadows.

- Consider the direction of light for more flattering results.

10. Audio Quality:

- Good audio is essential for video. Use an external microphone for better sound quality, especially in noisy environments.

- Monitor audio levels to prevent distortion.

11. Slow Motion and Time-Lapse:

- Experiment with slow-motion and time-lapse techniques to add creativity to your videos.

12. Planning and Storyboarding:

- Plan your shots in advance. Storyboarding can help visualize the sequence of shots for a more cohesive narrative.

- Be mindful of continuity between shots.

13. Post-Processing:

- Use video editing software for post-processing. This includes color correction, cutting unnecessary footage, adding transitions, and incorporating music or sound effects.

By combining these techniques and experimenting with different approaches, you

can enhance the overall quality and impact of your video content. Practice and

continuous learning are key to improving your video capturing skills.
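The rule of thirds mentioned under framing can be made concrete: divide the frame into a 3 x 3 grid and look at the four points where the grid lines cross. The helper below is a hypothetical sketch that returns those points for a frame of a given size.

```python
def rule_of_thirds_points(width, height):
    """Return the four grid-line intersections of a 3 x 3 grid over the frame."""
    xs = (width / 3, 2 * width / 3)
    ys = (height / 3, 2 * height / 3)
    return [(x, y) for y in ys for x in xs]


print(rule_of_thirds_points(1920, 1080))
# [(640.0, 360.0), (1280.0, 360.0), (640.0, 720.0), (1280.0, 720.0)]
```

Placing a subject's eyes or the horizon near one of these points, rather than dead centre, is the usual way the rule is applied.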

Audio Video Interleave, known by its acronym AVI, is a multimedia container format introduced by Microsoft in November

1992 as part of its Video for Windows technology. AVI files can contain both

audio and video data in a file container that allows synchronous

audio-with-video playback. Like the DVD video format, AVI files support

multiple audio and video streams, although these features are seldom used.

Most AVI files also use the file format extensions developed by the Matrox

OpenDML group in February 1996. These files are supported by Microsoft, and are

unofficially called "AVI 2.0".
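AVI is built on the RIFF container layout, so an AVI file begins with the four bytes "RIFF", a 4-byte little-endian size, and the form type "AVI ". The sketch below checks that 12-byte header; the function name is hypothetical, and the sample header is built in memory rather than read from a real file.

```python
import struct


def parse_riff_header(data: bytes):
    """Return (form_type, declared_size) for a RIFF header, or None if it is not RIFF."""
    if len(data) < 12 or data[:4] != b"RIFF":
        return None
    (size,) = struct.unpack("<I", data[4:8])  # little-endian 32-bit size
    return data[8:12].decode("ascii"), size


# Hypothetical header: "RIFF", a declared size of 1024 bytes, then "AVI ".
sample = b"RIFF" + struct.pack("<I", 1024) + b"AVI "
print(parse_riff_header(sample))          # ('AVI ', 1024)
print(parse_riff_header(b"not a video"))  # None
```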

What Is an MPEG File?

Developed by the Moving Picture Experts Group,

the same people that brought you such formats as MP3 and MP4,

MPEG is a video file format that uses either MPEG-1 or MPEG-2 file compression

depending on how it will be used.

Editing a video involves manipulating and arranging

video clips to create a final product. Here's a general guide on how to edit a

video:

1. Choose Your Editing Software:

- Select a video editing software that

suits your needs and skill level. Some popular options include Adobe Premiere

Pro, Final Cut Pro, iMovie, DaVinci Resolve, and HitFilm Express. Choose one

that fits your budget and requirements.

2. Import Your Footage

- Open your chosen video editing

software and import the video clips you want to edit. This is usually done by

selecting "Import" or "Import Media" and navigating to the

location of your video files.

3. Organize Your Clips

- Create a project and organize your

clips in the order you want them to appear. You can usually do this by dragging

and dropping clips onto a timeline.

4. Trim and Cut Clips

- Use the cutting tool to trim and cut

your clips. Remove any unnecessary or unwanted parts. This is often done by

setting in and out points and deleting the selected portion.

5. Add Transitions

- To make your video flow smoothly,

you can add transitions between clips. Common transitions include fades,

dissolves, wipes, and slides. Drag and drop these transitions between clips on

the timeline.

6. Add Music and Sound

- If you want background music or

other audio elements, import your audio files and place them on a separate

audio track. Adjust the volume levels as needed.

7. Add Text and Graphics

- Include titles, captions, and

graphics as necessary. Most video editing software provides tools to add text

overlays or graphics. You can customize the font, size, color, and position.

8. Apply Effects and Filters

- Enhance your video with effects and

filters. Adjust color correction, brightness, contrast, and apply any creative

effects you desire. Many video editing programs offer a variety of built-in

effects.

9. Review and Preview

- Regularly preview your video to

check the flow, transitions, and overall quality. Make adjustments as needed.

10. Export Your Video

- Once you're satisfied with your

edits, export your video in the desired format. Choose settings such as

resolution, frame rate, and compression options. Different platforms may have

specific requirements for video uploads.

11. Save Your Project

- Save your project file in the

native format of your video editing software. This allows you to make future

edits without losing your work.

Remember that the specifics of these steps may vary

depending on the software you're using, so refer to the user guide or help

documentation provided by the software for more detailed instructions.
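The trimming and transition steps above can be pictured as a simple data model: each clip keeps an in point and an out point, and the timeline length is the sum of the trimmed clips minus any overlap introduced by transitions. The `Clip` class, file names, and timings below are all hypothetical; no editing software exposes exactly this interface.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    name: str
    in_point: float   # seconds into the source where the kept part starts
    out_point: float  # seconds into the source where the kept part ends

    @property
    def duration(self) -> float:
        return self.out_point - self.in_point


def timeline_duration(clips, transition_s=0.0):
    """Total running time; each transition overlaps two neighbouring clips."""
    total = sum(clip.duration for clip in clips)
    overlaps = max(len(clips) - 1, 0) * transition_s
    return total - overlaps


timeline = [
    Clip("intro.mp4", 2.0, 7.0),
    Clip("interview.mp4", 10.0, 40.0),
    Clip("outro.mp4", 0.0, 5.0),
]
print(timeline_duration(timeline))                    # 40.0
print(timeline_duration(timeline, transition_s=1.0))  # 38.0
```

Only the in and out points change when a clip is trimmed; the source file itself is left alone, which is why saving the project file preserves the ability to re-edit later.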

Creating a movie involves various tools for

different stages of the filmmaking process, from pre-production to

post-production. Here are some commonly used tools for movie making:

1. Screenwriting

- Final Draft, Celtx, and WriterDuet:

These are popular screenwriting software tools that help writers format scripts

according to industry standards.

2. Pre-Production

- ShotPro: This app allows filmmakers

to create storyboards and animatics, helping with shot planning.

- StudioBinder and Celtx: These tools

help with production scheduling, script breakdowns, and collaboration among the

production team.

3. Camera and Filming

- Cameras: Depending on your budget,

you might use professional cameras like those from RED or ARRI, or more

consumer-friendly options like the Canon EOS series or the Sony Alpha series.

- Tripods and Stabilizers: Tools like

the DJI Ronin or Zhiyun Crane can stabilize shots, and tripods provide a stable

base for stationary shots.

4. Lighting

- Aputure, Godox, and Arri Lights:

These brands offer a variety of lighting solutions for different setups and

budgets.

5. Sound Recording

- Zoom H6, Rode NTG series, and

Sennheiser microphones: These tools are commonly used for high-quality audio

recording on set.

6. Editing

- Adobe Premiere Pro, Final Cut Pro,

DaVinci Resolve: Professional video editing software for cutting and arranging

video clips, adding effects, and refining the final product.

7. Visual Effects (VFX) and Animation

- Adobe After Effects, Blender: After

Effects is widely used for motion graphics and visual effects. Blender is a

free and open-source 3D creation suite that includes a powerful video editor.

8. Color Grading

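- DaVinci Resolve, Adobe SpeedGrade: These tools allow you to adjust the color and tone of your footage to achieve a desired look. Note that Adobe has discontinued SpeedGrade; its color tools now live in the Lumetri Color panel of Premiere Pro.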

9. Audio Editing

- Adobe Audition, Audacity: Tools for

refining and editing audio tracks, including dialogue, music, and sound

effects.

10. Music and Sound Effects

- Artlist, Epidemic Sound,

Soundstripe: These are platforms where you can find licensed music and sound

effects for your film.

11. Distribution and Screening

- FilmFreeway: A platform for

submitting films to festivals.

- Vimeo, YouTube: Platforms for

sharing and distributing your finished film.

Remember that the choice of tools can depend on

your specific needs, budget, and the scale of your project. Always check for

the latest versions and reviews to ensure compatibility and functionality.

UNIT 4

What is animation?

Animation is a method of photographing successive drawings, models, or even puppets to create an illusion of movement in a sequence. Because the eye retains an image for only about 1/10 of a second, the brain blends images shown in fast succession into a single moving image.

In traditional animation,

pictures are drawn or painted on transparent celluloid sheets to be

photographed. Early cartoons are examples of this, but today, most animated

movies are made with computer-generated imagery or CGI.

To create the appearance of smooth motion from these drawn, painted, or computer-generated images, animators consider the frame rate: the number of consecutive images displayed each second. Moving characters are usually shot “on twos”, which means each drawing is held for two frames, so a 24 frames per second film needs 12 drawings per second. Showing only 12 distinct images per second conveys motion but may look choppy. In film, a frame rate of 24 frames per second is often used for smooth motion.
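The arithmetic behind shooting on twos can be checked with a short calculation. The Python sketch below assumes a hypothetical helper, `drawings_needed`, which divides the total frame count by the number of frames each drawing is held for.

```python
import math


def drawings_needed(duration_s: float, fps: int = 24, hold: int = 2) -> int:
    """Number of distinct drawings for a shot.

    hold=1 means "on ones" (a new drawing every frame),
    hold=2 means "on twos" (each drawing shown for two frames).
    """
    total_frames = round(duration_s * fps)
    return math.ceil(total_frames / hold)


print(drawings_needed(1))            # 12 drawings for one second on twos
print(drawings_needed(10))           # 120 drawings for ten seconds on twos
print(drawings_needed(10, hold=1))   # 240 drawings for ten seconds on ones
```

Halving the number of drawings is the main reason studios shoot on twos: the projected frame rate stays at 24, but the drawing workload is cut in half.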

Different Types of Animation:

· Traditional Animation
· Rotoscoping
· Anime
· Cutout
· 3D Animation
· Stop Motion
· Motion graphics

1. Squash and Stretch.

Squash and stretch is arguably the most fundamental of the 12 principles of animation. It is applied to give a sense of weight and/or flexibility to objects or even to people. Consider a simple object like a bouncing ball: as it hits the ground, you can squash the ball flat and widen it.

Although exaggerated, this animation is grounded in

reality, because it creates the illusion of the ball being distorted by an

outside force - just like in real life.

You can apply squash and stretch to more realistic animation, too. But

keep in mind the object’s volume. If the length of the ball is vertically

stretched, its width must contract horizontally.
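One way to keep the volume constant is sketched here in Python, under the assumption that the ball is scaled independently along three axes. If the vertical scale is s, scaling both horizontal axes by 1/sqrt(s) keeps the product of the three scale factors at 1. The function name `squash_stretch_scales` is made up for this example.

```python
import math


def squash_stretch_scales(vertical: float) -> tuple[float, float, float]:
    """Return (x, y, z) scale factors that preserve volume.

    vertical > 1 stretches the object, vertical < 1 squashes it.
    The two horizontal axes share the compensation equally.
    """
    if vertical <= 0:
        raise ValueError("vertical scale must be positive")
    horizontal = 1.0 / math.sqrt(vertical)
    return (horizontal, vertical, horizontal)


print(squash_stretch_scales(4.0))    # stretched: taller and thinner
print(squash_stretch_scales(0.25))   # squashed: flatter and wider
```

For a flat 2D drawing the same idea applies to area instead of volume, in which case the width would simply be scaled by 1/s.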

2. Anticipation.

Use anticipation to add some realism when you want

to prepare your audience for some action. Consider what people do when they

prepare to do something. A footballer about to take a penalty would steady

themselves with their arms or swing their foot back ready to kick. If a golfer

wants to hit a golf ball, they must swing their arms back first.

Anticipation doesn’t just have to apply to sporty actions. Focus on an

object a character may be about to pick up or have a character anticipating

somebody’s arrival on screen.

3. Staging.

You want your audience’s attention to be on the important elements of

the story you’re telling and avoid distracting them with unnecessary detail.

With a combination of lighting, framing and composition, plus ensuring that you

remove clutter, you’ll be able to effectively advance your story.

4. Straight Ahead Action and Pose to Pose.

Straight Ahead and Pose to Pose are in a sense two principles in one, each describing a different approach to drawing. In straight ahead action, the animator draws each frame in order from beginning to end. This creates a fluid illusion of movement and suits action scenes, but it makes it hard to hit exact poses and keep proportions consistent.

With pose to pose, animators start by drawing key

frames and they fill in the intervals later. Because relation to surroundings

and composition become more important, this approach is preferable for

emotional, dramatic scenes. As Disney films often involve dramatic and action

scenes, their animators would often adopt both approaches.

With computer animation, the proportion problems of straight-ahead action largely disappear, because the software keeps a model's proportions consistent from frame to frame. Computers can also fill in the missing in-between frames in pose to pose.
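How a computer might fill in the frames between key poses can be sketched with plain linear interpolation. This Python example is an assumption-laden simplification: real animation software typically offers curved interpolation as well, and the `fill_inbetweens` function and the representation of a pose as a tuple of numbers are hypothetical.

```python
Pose = tuple[float, ...]   # e.g. (x, y) of a character's hand


def fill_inbetweens(keyframes: dict[int, Pose]) -> dict[int, Pose]:
    """Linearly interpolate a pose for every frame between key frames."""
    if not keyframes:
        return {}
    frames = sorted(keyframes)
    result: dict[int, Pose] = {}
    for fa, fb in zip(frames, frames[1:]):
        pa, pb = keyframes[fa], keyframes[fb]
        for f in range(fa, fb):
            t = (f - fa) / (fb - fa)          # 0.0 at key A, approaching 1.0 at key B
            result[f] = tuple(a + (b - a) * t for a, b in zip(pa, pb))
    result[frames[-1]] = keyframes[frames[-1]]  # keep the final key pose
    return result


# Two key poses drawn by the animator, at frame 0 and frame 4.
keys = {0: (0.0, 0.0), 4: (8.0, 4.0)}
for frame, pose in fill_inbetweens(keys).items():
    print(frame, pose)
```

The animator supplies only the two key poses; frames 1 to 3 are generated.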

5. Follow Through and Overlapping Action.

These two movement-based principles combine to make

movement in animation more realistic and create the impression characters are

following the laws of physics.

Follow through concerns the parts of the body that

continue to move when a character stops. The parts then pull back towards the

centre of mass, just like with a real person. Follow through also applies to

objects.

Parts of the body don’t move at the same rate and overlapping action

demonstrates this. For example, you could have a character’s hair moving during

the momentum of action and when the action is over, it continues to move a

fraction longer than the rest of the character.
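The lag described above can be imitated with a very simple model in which a trailing part (such as hair) moves a fixed fraction of the way toward the leading part on every frame. This Python sketch is only an illustration: the `follow` function and its `stiffness` parameter are hypothetical, and the model shows the delayed settling of overlapping action but not the overshoot a spring-based model would add.

```python
def follow(leader_positions: list[float], stiffness: float = 0.5) -> list[float]:
    """Positions of a trailing part that chases the leader each frame.

    stiffness is the fraction of the remaining gap closed per frame
    (1.0 = rigidly attached, small values = loose and slow to settle).
    """
    if not leader_positions:
        return []
    follower = float(leader_positions[0])
    trail = []
    for target in leader_positions:
        follower += (target - follower) * stiffness
        trail.append(follower)
    return trail


# The head moves for three frames and then stops at position 3.
head = [0, 1, 2, 3, 3, 3, 3]
hair = follow(head)
for h, t in zip(head, hair):
    print(h, t)
```

After the head stops, the hair values keep changing for several more frames, which is the effect the principle describes.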

6. Ease In, Ease Out.

This animation principle is also known as ‘slow in and slow out’. In the real world, objects have to accelerate as they start moving and slow down before stopping, as with a person running, a car on the road or a pendulum.

To represent this in animation, more frames must be drawn at the beginning and end of an action sequence, so that the object covers less distance per frame there. Ease in, ease out adds more realism to your animation and will help the audience identify and sympathise with your characters.
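In computer animation, this bunching of frames at the start and end of a move is usually produced by an easing curve. The Python sketch below uses the smoothstep curve 3t² − 2t³, which is one common choice and an assumption here rather than something the principle prescribes. The function names are hypothetical.

```python
def ease_in_out(t: float) -> float:
    """Smoothstep easing: slow near t=0 and t=1, fastest at t=0.5."""
    return t * t * (3.0 - 2.0 * t)


def eased_positions(start: float, end: float, n_frames: int) -> list[float]:
    """Position on each frame of a move from start to end."""
    if n_frames < 2:
        raise ValueError("need at least two frames")
    return [
        start + (end - start) * ease_in_out(i / (n_frames - 1))
        for i in range(n_frames)
    ]


positions = eased_positions(0.0, 100.0, 5)
steps = [b - a for a, b in zip(positions, positions[1:])]
print(positions)  # [0.0, 15.625, 50.0, 84.375, 100.0]
print(steps)      # [15.625, 34.375, 34.375, 15.625]
```

The distance covered per frame is small at both ends and large in the middle, so the object appears to accelerate away and then settle into its final position.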

7. Arcs.

In real life, most actions follow an arced trajectory. To achieve greater realism, animators should follow this principle.

Whether you’re creating the effect of limbs moving or an object thrown into the

air, movements that follow natural arcs will create fluidity and avoid

unnatural, erratic animation.
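For a swinging limb, the difference between an arc and a straight path can be made concrete. The Python sketch below assumes a rigid arm rotating about a fixed shoulder and interpolates the angle rather than the hand position, which keeps the hand on a circular arc. The function names and the two-dimensional setup are hypothetical simplifications.

```python
import math

Point = tuple[float, float]


def hand_position(shoulder: Point, arm_length: float, angle_deg: float) -> Point:
    """Hand location for an arm of fixed length at the given angle."""
    a = math.radians(angle_deg)
    return (shoulder[0] + arm_length * math.cos(a),
            shoulder[1] + arm_length * math.sin(a))


def arc_inbetweens(shoulder: Point, arm_length: float,
                   start_deg: float, end_deg: float, n_frames: int) -> list[Point]:
    """Interpolate the angle so the hand travels along an arc."""
    if n_frames < 2:
        raise ValueError("need at least two frames")
    return [
        hand_position(shoulder, arm_length,
                      start_deg + (end_deg - start_deg) * i / (n_frames - 1))
        for i in range(n_frames)
    ]


shoulder = (0.0, 0.0)
arc = arc_inbetweens(shoulder, 2.0, 0.0, 90.0, 3)

# Straight-line midpoint between the first and last hand positions.
mid = ((arc[0][0] + arc[-1][0]) / 2, (arc[0][1] + arc[-1][1]) / 2)

print(math.dist(arc[1], shoulder))  # arm length on the arc
print(math.dist(mid, shoulder))     # arm length on the straight path
```

On the arc the arm keeps its length of 2.0 on every frame, while the straight-line midpoint puts the hand closer to the shoulder, so the arm would appear to shrink mid-swing.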

To keep arcs in mind, traditional animators often

draw them lightly on paper to use as reference and to erase when they’re no

longer needed. Speed and timing are important with arcs, as sometimes they

happen so quickly that they blur to the point they’re unrecognizable.

Of course, this is sometimes done deliberately, to give the impression of something unrealistically or amusingly fast. This is known as an animation smear. Chuck Jones, one of the greatest animators of the 20th century, was an expert at these. He was behind one of the first examples, in a short for Warner Bros. in 1942. Jones first used the smear only to save time, but he liked the result and returned to it in many Looney Tunes animations. It is still used today in The Simpsons.

8. Secondary Action.

This principle of animation helps emphasize the

main action within a scene by adding an extra dimension to your characters and

objects. Subtleties, such as the way a person swings their arms while walking

down the street, give colour to your creations and make them appear more human.