Quick Revision for operating system - vikatu

UNIT I

Operating System lies in the category of system software. It basically manages all the resources of the computer. An operating system acts as an interface between the software and different parts of the computer or the computer hardware. The operating system is designed in such a way that it can manage the overall resources and operations of the computer.

Operating System is a fully integrated set of specialized programs that handle all the operations of the computer. It controls and monitors the execution of all other programs that reside in the computer, which also includes application programs and other system software of the computer. Examples of Operating Systems are Windows, Linux, Mac OS, etc.

An Operating System (OS) is a collection of software that manages computer hardware resources and provides common services for computer programs. The operating system is the most important type of system software in a computer system.

There are multiple types of operating systems:

- Single-process operating system (MS-DOS)

- Batch processing operating system (ATLAS)

- Multiprogramming operating system (THE)

- Multitasking operating system (CTSS)

- Multiprocessing operating system (Windows NT)

- Distributed operating system (LOCUS)

- Real-time OS (ATCS)

The components of an operating system play a key role in making the various parts of a computer system work together. An operating system has the following components:

- Process Management

- File Management

- Network Management

- Main Memory Management

- Secondary Storage Management

- I/O Device Management

- Security Management

- Command Interpreter System

Process Management

- Process management is the component that manages the many processes running simultaneously on the operating system. Every running application program has one or more processes associated with it.

- For example, when you use a web browser like Chrome, there is a process running for that browser program.

- Process management keeps processes running efficiently. It also manages the memory allocated to them and shuts them down when needed.

- The execution of a process must be sequential: at any time, at most one instruction is executed on behalf of the process.

Functions of process management

The functions of process management in the operating system are:

- Process creation and deletion.

- Suspension and resumption.

- Process synchronization.

- Process communication.

File Management

A file is a set of related information defined by its creator. It commonly represents programs (both source and object forms) and data. Data files can be alphabetic, numeric, or alphanumeric.

Functions of file management

The operating system has the following important activities in connection with file management:

- File and directory creation and deletion.

- Manipulating files and directories.

- Mapping files onto secondary storage.

- Backup files on stable storage media.

Network Management

Network management is the process of administering and managing computer networks. It includes performance management, provisioning of networks, fault analysis, and maintaining the quality of service.

A distributed system is a collection of computers or processors that do not share memory or a clock. In this type of system, each processor has its own local memory, and the processors communicate with each other over communication lines, such as fibre optics or telephone lines.

The computers are connected through a communication network, which can be configured in many different ways. The network can be fully or partially connected, and its design must address routing and connection strategies as well as connection and security issues.

Functions of Network management

Network management provides the following functions, such as:

- The processors in a distributed system vary in size and function. They may include minicomputers, microprocessors, and many general-purpose computer systems.

- A distributed system also offers the user access to the various resources the network shares.

- Access to shared resources speeds up computation and improves data availability and reliability.

Main Memory management

Main memory is a large array of words or bytes, each with its own address. Memory management is carried out through a sequence of reads and writes to specific memory addresses.

Functions of Memory management

An Operating System performs the following functions for Memory Management in the operating system:

- It keeps track of primary memory.

- It determines which parts of memory are in use and by whom, and which parts are not in use.

- In a multiprogramming system, the OS decides which process gets memory and how much.

- It allocates memory when a process requests it.

- It de-allocates memory when a process no longer requires it or has terminated.

Secondary-Storage Management

The most important task of a computer system is to execute programs. During execution, these programs, together with the data they access, must be in main memory. Main memory is too small to store all data and programs permanently, so the computer system offers secondary storage to back up main memory.

Modern computers use hard drives or SSDs as the principal storage for both programs and data. Secondary storage management also works with storage devices such as USB flash drives and CD/DVD drives. Programs like assemblers and compilers are stored on the disk until they are loaded into memory, and then use the disk as both a source and a destination for processing.

Functions of Secondary storage management

Here are some major functions of secondary storage management in the operating system:

- Storage allocation

- Free space management

- Disk scheduling

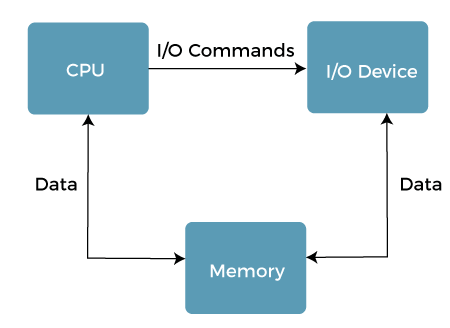

I/O Device Management

One important purpose of an operating system is to hide the variations of specific hardware devices from the user.

Functions of I/O management

The I/O management system offers the following functions, such as:

- It offers a buffer caching system

- It provides general device driver code

- It provides drivers for particular hardware devices.

- Only the device driver knows the individual characteristics of the specific device it is assigned to.

Security Management

The various processes in an operating system need to be protected from one another's activities. Therefore, various mechanisms ensure that processes that want to operate on files, memory, the CPU, and other hardware resources have proper authorization from the operating system.

Security refers to a mechanism for controlling the access of programs, processes, or users to the resources defined by a computer system. This mechanism must provide a way to specify the controls to be imposed, together with some means of enforcement.

For example, memory addressing hardware ensures that a process can execute only within its own address space. The timer ensures that no process can keep control of the CPU without eventually relinquishing it. Lastly, no process is allowed to do its own I/O, which protects the integrity of the various peripheral devices.

Security can improve reliability by detecting latent errors at the interfaces between component subsystems. Early detection of interface errors can prevent a malfunctioning subsystem from contaminating a healthy one. An unprotected resource cannot defend against use or misuse by an unauthorized or incompetent user.

System Call

The interface between a process and the operating system is provided by system calls. When a program in user mode requires access to hardware devices or other resources, it must ask the kernel to provide that access. This is done via a system call. When a program makes a system call, the mode is switched from user mode to kernel mode. This is called a mode switch (it is not the same as a context switch, which changes the running process).

There are multiple types of system calls:

- Process control

- device management (hardware device)

- file management

- information maintenance

- communication
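As a rough illustration of these categories, the sketch below uses Python's `os` module, whose functions are thin wrappers over system calls. The function name `syscall_demo` and the file name `demo.txt` are made up for this example; it covers only information maintenance, file management, and communication.

```python
import os
import tempfile


def syscall_demo():
    """Exercise a few wrappers over system calls and return what they produced."""
    results = {}

    # Information maintenance: ask the kernel for this process's ID.
    results["pid"] = os.getpid()

    # File management: create, write, read back, and close a file by descriptor.
    directory = tempfile.mkdtemp()
    path = os.path.join(directory, "demo.txt")  # hypothetical file name
    fd = os.open(path, os.O_CREAT | os.O_RDWR)
    os.write(fd, b"hello kernel")
    os.lseek(fd, 0, os.SEEK_SET)  # rewind before reading
    results["file_data"] = os.read(fd, 100)
    os.close(fd)
    os.remove(path)
    os.rmdir(directory)

    # Communication: a pipe is a kernel-managed channel between two descriptors.
    read_end, write_end = os.pipe()
    os.write(write_end, b"ping")
    os.close(write_end)
    results["pipe_data"] = os.read(read_end, 100)
    os.close(read_end)

    return results
```

Each `os` call here crosses from user mode into kernel mode and back, which is the mode switch described above.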

Definition of Process

Basically, a process is a program in execution.

An active program that is currently running on the operating system is known as a process. The process is the basis of all computation. Although a process is closely related to program code, it is not the same thing as the code. A process is an "active" entity, in contrast to the program, which is a "passive" entity. The attributes of a process include the hardware state, memory, CPU state, and other attributes.

Process Control Block

An operating system handles process creation, scheduling, and termination with the help of the Process Control Block. The Process Control Block (PCB), which is part of the operating system, aids in managing how processes operate. Every process has a Process Control Block associated with it. A PCB keeps track of a process by storing data such as its state, I/O status, and CPU scheduling information.

The Process Control Block is easiest to understand through its components.

A Process Control Block consists of:

- Process ID

- Process State

- Program Counter

- CPU Registers

- CPU Scheduling Information

- Accounting and Business Information

- Memory Management Information

- Input Output Status Information

Each component is described in detail below.

1) Process ID

It is a unique identifier assigned to the process. The operating system uses it to find and identify the process.

2) Process State

A process passes through the following states during its lifetime.

i) New State

A program that is about to be taken up by the operating system into main memory is in the new state.

ii) Ready State

A process that is ready to execute and is waiting for the CPU is in the ready state.

iii) Running State

A process whose instructions are being executed is in the running state.

iv) Waiting or Blocking State

A process that is waiting for something, such as an I/O device, is in the waiting or blocking state.

v) Terminated State

A process that has completed its execution is in the terminated state.

3) Program Counter

The program counter stores the address of the next instruction to be executed for the process.

4) CPU Registers

When the process leaves the running state, the contents of the processor registers are saved here so that they can be restored when it runs again. CPU registers include accumulators, index registers, general-purpose registers, instruction registers, and condition code registers.

5) CPU Scheduling Information

A process must be scheduled for execution. The schedule determines when it moves from ready to running. CPU scheduling information includes the process priority, pointers to scheduling queues (to indicate the order of execution), and other scheduling parameters.

6) Accounting and Business Information

Accounting and business information includes the amount of CPU used, the real time used by the process, job or process numbers, and so on.

7) Memory Management Information

The memory management information section contains the page tables, the segment tables, and the values of the base and limit registers, depending on the memory system used by the operating system.

8) Input Output Status Information

The input/output status information section holds I/O-related information for the process, such as the I/O devices allocated to it and its list of open files.
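The components above can be pictured as fields of one record per process. The following is a minimal sketch, not how any real kernel lays out its PCB: the field names, the `State` enum, and the `move_to` helper are all assumptions made for illustration, and the transition table follows the five states listed under Process State.

```python
from dataclasses import dataclass, field
from enum import Enum


class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"


# Transitions allowed in the five-state model (an illustrative assumption).
TRANSITIONS = {
    State.NEW: {State.READY},
    State.READY: {State.RUNNING},
    State.RUNNING: {State.READY, State.WAITING, State.TERMINATED},
    State.WAITING: {State.READY},
    State.TERMINATED: set(),
}


@dataclass
class PCB:
    pid: int                                        # 1) process ID
    state: State = State.NEW                        # 2) process state
    program_counter: int = 0                        # 3) next instruction address
    registers: dict = field(default_factory=dict)   # 4) saved CPU registers
    priority: int = 0                               # 5) scheduling information
    cpu_time_used: int = 0                          # 6) accounting information
    base: int = 0                                   # 7) memory management: base register
    limit: int = 0                                  # 7) memory management: limit register
    open_files: list = field(default_factory=list)  # 8) I/O status information

    def move_to(self, new_state):
        """Change state, rejecting transitions the model does not allow."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot go from {self.state.value} to {new_state.value}")
        self.state = new_state
```

For example, `PCB(pid=1)` starts in the new state, and calling `move_to(State.READY)` followed by `move_to(State.RUNNING)` mirrors admission and dispatch.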

Process scheduling: the OS maintains all PCBs in process scheduling queues.

The OS maintains a separate queue for each process state, and the PCBs of all processes in the same execution state are placed in the same queue.

When the state of a process changes, its PCB is unlinked from its current queue and moved to the queue of its new state.

Job queue: this queue keeps all the processes in the system.

Ready queue: this queue keeps the set of all processes residing in main memory, ready and waiting to execute. A new process is always put in this queue.

Device queue: the processes that are blocked because an I/O device is unavailable constitute this queue.
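The unlink-and-move behaviour of the queues can be sketched with one `deque` per state. This is only an illustration: the class name `SchedulingQueues`, the use of bare process IDs instead of full PCBs, and the state names are assumptions, not something the notes prescribe.

```python
from collections import deque


class SchedulingQueues:
    """One FIFO queue per process state; processes are tracked by ID."""

    STATES = ("ready", "running", "waiting", "terminated")

    def __init__(self):
        self.queues = {state: deque() for state in self.STATES}
        self.state_of = {}

    def admit(self, pid):
        # A new process is always put in the ready queue.
        self.queues["ready"].append(pid)
        self.state_of[pid] = "ready"

    def move(self, pid, new_state):
        # Unlink from the current queue, then link into the new state's queue.
        self.queues[self.state_of[pid]].remove(pid)
        self.queues[new_state].append(pid)
        self.state_of[pid] = new_state
```

Moving a process from ready to waiting, for instance, models it blocking on an unavailable I/O device and joining a device queue.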

Processes are scheduled so that work finishes on time. CPU scheduling allows one process to use the CPU while another process is delayed (on standby) because a resource such as I/O is unavailable, thus making full use of the CPU. The purpose of CPU scheduling is to make the system more efficient, faster, and fairer.

Whenever the CPU becomes idle, the operating system must select one of the processes in the ready queue for execution. The selection is done by the short-term (CPU) scheduler. The scheduler selects among the processes in memory that are ready to execute and assigns the CPU to one of them.

Arrival time: the time at which the process arrives in the ready queue.

Completion time: the time at which the process completes its execution.

Burst time: the time required by a process for CPU execution.

Waiting time: the total time the process spends waiting in the ready queue.

Turnaround time (TAT): the total time the process spends in the system, from arrival to completion.

TAT = Completion time - Arrival time = Waiting time + Burst time
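To see how these quantities relate, the sketch below computes them for a small workload. It assumes first-come, first-served (FCFS) scheduling, which the notes do not name, and processes that never block for I/O; the function name `fcfs` and the sample workload are illustrative.

```python
def fcfs(processes):
    """Compute scheduling metrics under first-come, first-served.

    processes: list of (name, arrival_time, burst_time) tuples.
    Returns a dict mapping each name to its metrics.
    """
    clock = 0
    results = {}
    # Serve processes in order of arrival (ties keep their input order).
    for name, arrival, burst in sorted(processes, key=lambda p: p[1]):
        start = max(clock, arrival)        # the CPU may sit idle until arrival
        completion = start + burst
        turnaround = completion - arrival  # TAT = completion time - arrival time
        waiting = turnaround - burst       # so TAT = waiting time + burst time
        results[name] = {
            "completion": completion,
            "turnaround": turnaround,
            "waiting": waiting,
        }
        clock = completion
    return results


metrics = fcfs([("P1", 0, 5), ("P2", 1, 3), ("P3", 2, 8)])
```

With this workload, P2 arrives at time 1 but must wait until P1 finishes at time 5, so its waiting time is 4 and its turnaround time is 4 + 3 = 7.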

CPU utilization: the CPU should be kept as busy as possible. In a real system, CPU utilization should range from 40% (for a lightly loaded system) to 90% (for a heavily loaded system).

Real-time systems are systems that carry out real-time tasks. These tasks need to be performed immediately, with a certain degree of urgency. In particular, they involve controlling certain events or reacting to them. Real-time tasks can be classified as hard real-time tasks and soft real-time tasks.

A hard real-time task must be completed by a specified deadline; missing it could lead to huge losses. In a soft real-time task, a specified deadline can be missed, because the task can be rescheduled or completed after the specified time.

In real-time systems, the scheduler is considered the most important component, and it is typically a short-term task scheduler. The main focus of this scheduler is to reduce the response time of each process rather than to handle deadlines.

If a preemptive scheduler is used, the real-time task needs to wait until the current task's time slice completes. With a non-preemptive scheduler, even if the highest priority is allocated to the task, it needs to wait until the current task completes. The current task may be slow or of lower priority, which can lead to a longer wait.

Threads in Operating System (OS)

A thread is a single sequential flow of execution within a process, so it is also known as a thread of execution or thread of control. Every process has at least one thread, and there can be more than one thread inside a process. Each thread of the same process has its own program counter, its own stack of activation records, and its own control block. A thread is often referred to as a lightweight process.
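The split between what a thread owns and what it shares with its process can be sketched with Python's `threading` module. This is an illustration only; the names `run_threads`, `worker`, and `shared` are made up for the example.

```python
import threading


def run_threads(n_threads=4, increments=1000):
    """Start several threads in one process and combine their results."""
    shared = {"total": 0}      # shared by every thread of the process
    lock = threading.Lock()    # guards the shared total

    def worker():
        local_count = 0        # lives on this thread's own stack
        for _ in range(increments):
            local_count += 1
        with lock:
            shared["total"] += local_count

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()               # wait for every thread to finish
    return shared["total"]
```

Each worker counts privately in `local_count`, so the loop needs no coordination; only the final update touches data that all threads can see.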

Types of Threads

In the operating system, there are two types of threads.

- Kernel level thread.

- User-level thread.

User-level thread

The operating system does not recognize user-level threads. User-level threads are easy to implement and are implemented in user space. If a user-level thread performs a blocking operation, the whole process is blocked. The kernel knows nothing about user-level threads and manages them as if they were single-threaded processes. Examples: Java thread, POSIX threads, etc.

Kernel level thread

The operating system recognizes kernel-level threads. There is a thread control block for each thread and a process control block for each process in the system. Kernel-level threads are implemented by the operating system. The kernel knows about all the threads and manages them, and it offers system calls to create and manage threads from user space. Kernel threads are more difficult to implement than user threads, and their context switch time is longer. If a kernel thread performs a blocking operation, the other threads can continue execution. Examples: Windows, Solaris.

UNIT III

Critical Section Problem in OS (Operating System)

A critical section is the part of a program that accesses shared resources. The resource may be any resource in a computer, such as a memory location, a data structure, the CPU, or an I/O device.

The critical section cannot be executed by more than one process at the same time, so the operating system faces difficulties in allowing and disallowing processes from entering the critical section.

The critical section problem is to design a set of protocols which ensure that a race condition among the processes never arises.

In order to synchronize cooperating processes, our main task is to solve the critical section problem. A solution must satisfy the following conditions: mutual exclusion, progress, and bounded waiting.
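As a small illustration of protecting a critical section, the sketch below guards a shared counter with a lock so that only one thread updates it at a time. It uses Python threads in place of processes, and `threading.Lock` is one possible mechanism among several; the names `count_with_lock` and `worker` are illustrative.

```python
import threading


def count_with_lock(n_threads=4, increments=10000):
    """Increment a shared counter from several threads without a race."""
    counter = 0
    lock = threading.Lock()

    def worker():
        nonlocal counter
        for _ in range(increments):
            with lock:         # entry section: acquire the lock
                counter += 1   # critical section: touch the shared data
            # exit section: the lock is released when the with-block ends

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter
```

Without the lock, the read-modify-write in `counter += 1` could interleave between threads and lose updates, which is the race condition the protocol is meant to rule out.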

Types of Semaphores

There are two main types of semaphores: counting semaphores and binary semaphores. Details are given below.

- Counting Semaphores

These are integer-valued semaphores with an unrestricted value domain. They are used to coordinate resource access, where the semaphore count is the number of available resources. When a resource is added, the semaphore count is incremented, and when a resource is removed, the count is decremented.

- Binary Semaphores

Binary semaphores are like counting semaphores, but their value is restricted to 0 and 1. The wait operation succeeds only when the semaphore is 1, and the signal operation succeeds when the semaphore is 0. It is sometimes easier to implement binary semaphores than counting semaphores.
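The difference between the two types can be sketched with Python's `threading.Semaphore`, where `acquire` plays the role of wait and `release` the role of signal. The function below is a hypothetical helper that records how many workers hold a resource at once; the short sleep only stands in for real work.

```python
import threading
import time


def max_concurrent_users(resources=2, workers=6):
    """Return the peak number of workers that held a resource at the same time."""
    sem = threading.Semaphore(resources)  # count = number of available resources
    guard = threading.Lock()              # protects the bookkeeping below
    active = 0
    peak = 0

    def worker():
        nonlocal active, peak
        sem.acquire()                     # wait: take one resource or block
        try:
            with guard:
                active += 1
                peak = max(peak, active)
            time.sleep(0.01)              # pretend to use the resource
            with guard:
                active -= 1
        finally:
            sem.release()                 # signal: give the resource back

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return peak
```

With `resources=1` the semaphore behaves as a binary semaphore and the peak can never exceed one; with a larger count, up to that many workers may proceed together.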

Synchronization Problems

These problems are used for testing nearly every newly proposed synchronization scheme. The following problems of synchronization are considered as classical problems:

1. Bounded-buffer (or Producer-Consumer) Problem,

2. Dining-Philosophers Problem,

3. Readers and Writers Problem,

4. Sleeping Barber Problem

Some of these are summarized below.

Bounded-Buffer (or Producer-Consumer) Problem

The bounded-buffer problem is also called the producer-consumer problem. One solution is to create two counting semaphores, “full” and “empty”, to keep track of the current number of full and empty buffer slots respectively. Producers produce an item and consumers consume an item, but each uses one slot of the buffer at a time.
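A minimal sketch of the semaphore-based solution follows, using Python threads with one producer and one consumer. The `full` and `empty` semaphores come from the description above; the extra `mutex` lock, the function name, and the peak-size bookkeeping are assumptions added for the example.

```python
import threading
from collections import deque


def run_bounded_buffer(capacity=3, items=10):
    """Pass `items` integers through a buffer that holds at most `capacity`."""
    buffer = deque()
    empty = threading.Semaphore(capacity)  # counts empty slots
    full = threading.Semaphore(0)          # counts filled slots
    mutex = threading.Lock()               # protects the buffer itself
    consumed = []
    peak = 0

    def producer():
        nonlocal peak
        for i in range(items):
            empty.acquire()                # wait for an empty slot
            with mutex:
                buffer.append(i)
                peak = max(peak, len(buffer))
            full.release()                 # announce a filled slot

    def consumer():
        for _ in range(items):
            full.acquire()                 # wait for a filled slot
            with mutex:
                item = buffer.popleft()
            consumed.append(item)
            empty.release()                # announce an empty slot

    threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return consumed, peak
```

Because the producer must acquire `empty` before appending, the buffer never grows past its capacity, and the consumer blocks on `full` whenever the buffer is empty.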

Readers and Writers Problem

Suppose that a database is to be shared among several concurrent processes. Some of these processes may want only to read the database, whereas others may want to update (that is, to read and write) the database. We distinguish between these two types of processes by referring to the former as readers and to the latter as writers. In operating systems, this situation is called the readers-writers problem. Problem parameters:

- One set of data is shared among a number of processes.

- Once a writer is ready, it performs its write. Only one writer may write at a time.

- If a process is writing, no other process can read the data.

- If at least one reader is reading, no other process can write.

- Readers may only read; they may not write.
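One common way to meet these rules is to let the first reader lock out writers and the last reader let them back in. The sketch below follows that approach with Python locks; it is one possible solution (it favours readers, so writers can starve), and the class and method names are illustrative.

```python
import threading


class ReadersWriterLock:
    """Many concurrent readers or one writer, never both."""

    def __init__(self):
        self._read_count = 0
        self._mutex = threading.Lock()     # protects _read_count
        self._resource = threading.Lock()  # held by a writer or by the group of readers

    def acquire_read(self):
        with self._mutex:
            self._read_count += 1
            if self._read_count == 1:      # first reader locks out writers
                self._resource.acquire()

    def release_read(self):
        with self._mutex:
            self._read_count -= 1
            if self._read_count == 0:      # last reader lets writers in
                self._resource.release()

    def acquire_write(self):
        self._resource.acquire()

    def try_acquire_write(self):
        """Non-blocking variant: True if the write lock was obtained."""
        return self._resource.acquire(blocking=False)

    def release_write(self):
        self._resource.release()
```

A reader brackets its access with `acquire_read` and `release_read`, and a writer with `acquire_write` and `release_write`; while any reader is active, `try_acquire_write` returns `False`.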

Sleeping Barber Problem

A barber shop has one barber, one barber chair, and N chairs to wait in. When there are no customers, the barber goes to sleep in the barber chair and must be woken when a customer comes in. While the barber is cutting hair, new customers take empty seats to wait, or leave if there is no vacancy. This is the Sleeping Barber Problem.

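Deadlock

A deadlock can arise only if the following four conditions hold at the same time:

- Mutual exclusion

- Hold and wait

- No preemption

- Circular wait

Methods for handling deadlock:

- Deadlock ignorance: simply ignore the deadlock. For example, if your system hangs, you ignore the cause and restart the system.

- Deadlock prevention: ensure that at least one of the four conditions (mutual exclusion, hold and wait, no preemption, circular wait) can never hold.

- Deadlock avoidance: use the Banker's algorithm to keep the system in a safe state.

- Deadlock detection and recovery: detect the deadlock (for example with a resource allocation graph) and recover from it.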
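The safety check at the heart of the Banker's algorithm can be sketched as follows. This is a minimal version under the usual textbook assumptions: `available`, `allocation`, and `need` are lists of per-resource counts, and the function name `is_safe` and the sample numbers are illustrative.

```python
def is_safe(available, allocation, need):
    """Return (safe, order): whether a safe sequence exists, and one such order.

    available:  free units of each resource type
    allocation: allocation[i][j] = units of resource j held by process i
    need:       need[i][j] = further units of resource j process i may request
    """
    work = list(available)
    finished = [False] * len(allocation)
    order = []
    progressed = True
    while progressed:
        progressed = False
        for i, (held, wanted) in enumerate(zip(allocation, need)):
            # Process i can finish if its remaining need fits in what is free.
            if not finished[i] and all(n <= w for n, w in zip(wanted, work)):
                # When it finishes, it returns everything it holds.
                work = [w + h for w, h in zip(work, held)]
                finished[i] = True
                order.append(i)
                progressed = True
    return all(finished), order


allocation = [[0, 1, 0], [2, 0, 0], [3, 0, 2], [2, 1, 1], [0, 0, 2]]
need = [[7, 4, 3], [1, 2, 2], [6, 0, 0], [0, 1, 1], [4, 3, 1]]
safe, order = is_safe([3, 3, 2], allocation, need)
```

A request is granted only if the state that would result still passes this check; if no process can finish with the free resources, the state is unsafe and the request is deferred.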

Memory Management

- It is a form of resource management applied to computer memory.

- The essential requirement of memory management is to provide ways to dynamically allocate portions of memory to programs at their request, and to free them for reuse when no longer needed.

Functions of memory management:

- Allocate and deallocate memory before and after process execution.

- Keep track of the memory space used by processes.

- Minimize fragmentation issues.

- Ensure proper utilization of main memory.

- Maintain data integrity while processes execute.

Logical address

- It is also known as a virtual address.

- It is an address generated by the CPU during program execution.

- It is used as a reference to access a physical memory location.

Physical address

- It is the actual address in main memory where data is stored.

- The memory management unit (MMU) translates logical addresses to physical addresses.
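One simple translation scheme uses the base and limit registers mentioned under Memory Management Information. The sketch below assumes that scheme (real MMUs may instead use paging or segmentation); the function name and the sample values are illustrative.

```python
def translate(logical_address, base, limit):
    """Map a logical address to a physical one using base and limit registers."""
    # The limit register bounds the process's address space.
    if not 0 <= logical_address < limit:
        raise MemoryError("address outside the process's address space")
    # The base register relocates the address into physical memory.
    return base + logical_address


physical = translate(100, base=14000, limit=3000)
```

Here logical address 100 lands at physical address 14100, while any logical address of 3000 or more is rejected before it reaches memory.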

- Contiguous memory management: In a contiguous memory management scheme, each program occupies a single block of storage locations, that is, a set of memory locations with consecutive addresses.

- Single contiguous: In this scheme, main memory is divided into two contiguous areas or partitions. The OS resides permanently in one partition, generally in low memory, and the user process is loaded into the other partition.

--simple to implement

--easy to manage and design

--in this scheme, once a process is loaded, it is given the processor's full time and no other process will interrupt it.

- Multiple contiguous: In this scheme, the OS divides the available main memory into multiple parts in order to load multiple processes into main memory.

- Non-contiguous memory management: In non-contiguous allocation, also known as dynamic or linked allocation, each process is allocated a series of non-contiguous blocks of memory that can be located anywhere in physical memory. This reduces the memory lost to external fragmentation.

- Paging: a storage mechanism used by the OS to retrieve processes from secondary storage into main memory in the form of fixed-size pages.

- Segmentation: a memory management technique in which memory is divided into variable-size parts. Each part is known as a segment, which can be allocated to a process.
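Under paging, a logical address splits into a page number and an offset, and a page table maps each page to a frame in physical memory. The sketch below assumes a 1024-byte page size and a dictionary as the page table; both, along with the function name, are illustrative choices.

```python
PAGE_SIZE = 1024  # assumed page size in bytes


def translate_paged(logical_address, page_table, page_size=PAGE_SIZE):
    """Translate a logical address using a page table (page number -> frame number)."""
    page_number, offset = divmod(logical_address, page_size)
    if page_number not in page_table:
        # A real OS would handle this as a page fault and load the page.
        raise KeyError(f"page {page_number} is not in memory")
    frame_number = page_table[page_number]
    return frame_number * page_size + offset


page_table = {0: 5, 1: 2}  # page 0 is in frame 5, page 1 is in frame 2
physical = translate_paged(1030, page_table)
```

Logical address 1030 is page 1 at offset 6, and page 1 lives in frame 2, so the physical address is 2 * 1024 + 6 = 2054. The pages of one process need not sit in adjacent frames, which is what makes the allocation non-contiguous.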

Benefits of Disk management in OS

1. Increased Efficiency

2. Improved Performance

3. Better Security

4. Easier Backup

What is Swap-Space Management?

Swap-space management is another low-level task of the operating system. Virtual memory uses disk space as an extension of main memory. Since disk access is much slower than memory access, using swap space significantly decreases system performance. The main goal for the design and implementation of swap space is to provide the best throughput for the virtual memory system.

Stable-Storage Implementation:

To implement stable storage, we need to replicate the required information on multiple storage devices with independent failure modes. The writing of an update should be coordinated in such a way that a failure during the update cannot destroy all the copies of the state, and so that, when recovering from a failure, we can force all the copies to a consistent and correct value even if another failure occurs during the recovery.

File concept

The operating system provides a logical view of the information stored on disk; this logical unit is known as a file. The information stored in files is not lost during power failures.

A file is how data is written to the computer's storage. It is a sequence of bits, bytes, or records, the structure of which is defined by the owner and depends on the type of the file.

What is directory structure?

The directory structure is the organization of files into a hierarchy of folders. It should be stable and scalable; it should not fundamentally change, only be added to. Computers have used the folder metaphor for decades as a way to help users keep track of where something can be found.

What is Protection?

Protection refers to a mechanism that controls the access of programs, processes, or users to the resources defined by a computer system. Protection supports a multiprogramming operating system, so that many users can safely share a common logical name space such as a directory or files.

Need for Protection:

- To prevent the access of unauthorized users

- To ensure that each active program or process in the system uses resources only in accordance with the stated policy

- To improve reliability by detecting latent errors

Advantages of system protection in an operating system:

- Ensures the security and integrity of the system

- Prevents unauthorized access, misuse, or modification of the operating system and its resources

- Protects sensitive data

- Provides a secure environment for users and applications

- Prevents malware and other security threats from infecting the system

- Allows for safe sharing of resources and data among users and applications

- Helps maintain compliance with security regulations and standards

Disadvantages of system protection in an operating system:

- Can be complex and difficult to implement and manage

- May slow down system performance due to increased security measures

- Can cause compatibility issues with some applications or hardware

- Can create a false sense of security if users are not properly educated on safe computing practices

- Can create additional costs for implementing and maintaining security measures.
